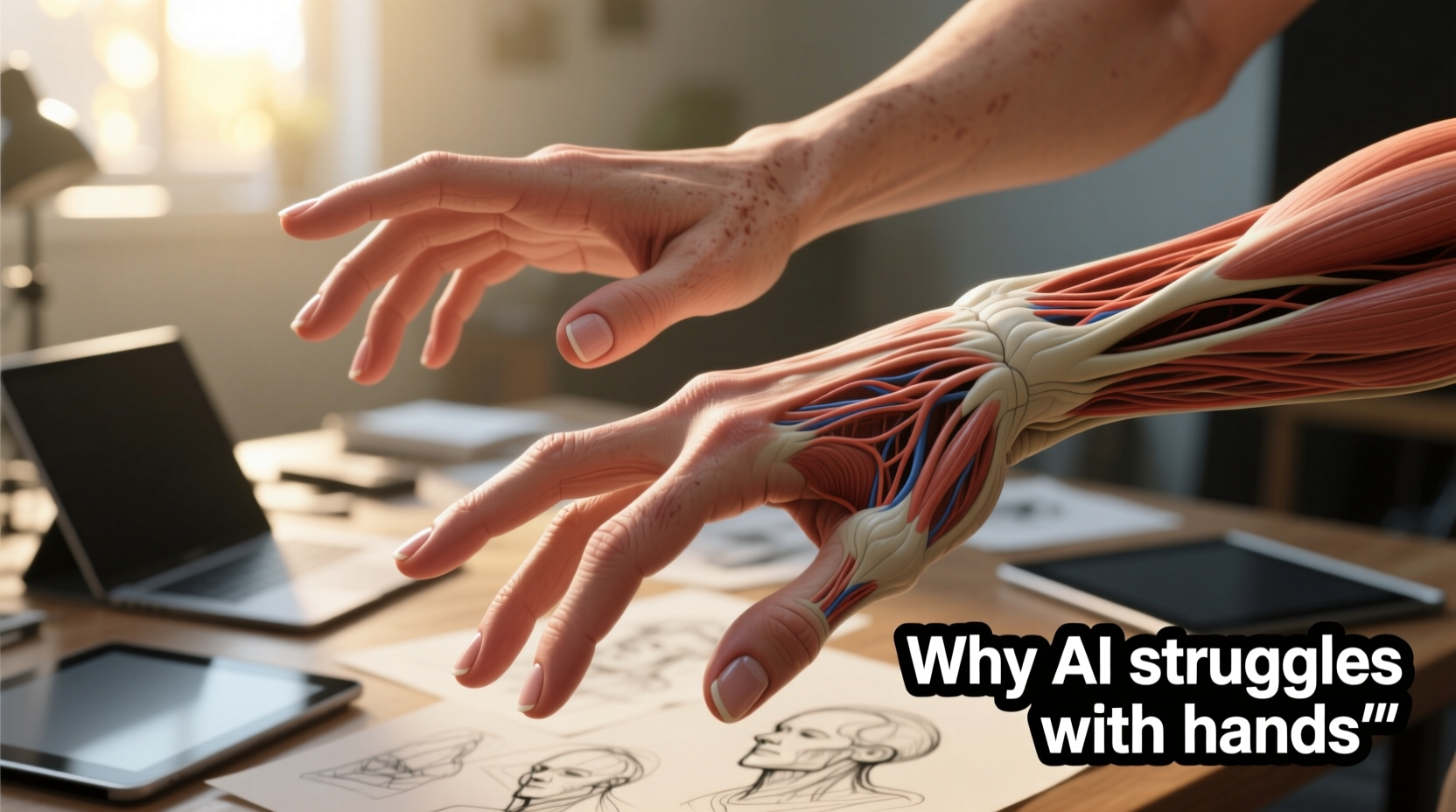

AI-generated art has made astonishing progress in recent years. From photorealistic portraits to surreal dreamscapes, generative models like DALL·E, MidJourney, and Stable Diffusion can produce stunning visuals with minimal input. Yet, despite their sophistication, one glaring flaw persists: hands. Whether splayed awkwardly, fused into unnatural shapes, or sprouting extra fingers, AI-generated hands often look unsettling — or outright wrong. This isn't a minor glitch; it's a symptom of deeper architectural and data-driven challenges within AI systems.

The problem isn't new, but as AI art becomes more mainstream, understanding why hands fail so frequently is essential for artists, developers, and users who rely on these tools. The issue lies not in a lack of processing power or creativity, but in how AI models learn, interpret anatomy, and generalize from limited or biased training data.

Why Hands Are Structurally Complex for AI

Human hands are among the most intricate parts of the body. They consist of 27 bones, numerous joints, tendons, muscles, and skin folds — all capable of near-infinite configurations. Unlike faces, which have relatively consistent proportions and symmetry, hands change shape dramatically based on gesture, angle, lighting, and interaction with objects.

For an AI model trained on static images, capturing this variability is extremely difficult. Most training datasets contain far fewer high-quality, diverse images of hands than of faces or full bodies. When hands do appear in photos, they’re often partially obscured, blurred, or positioned in non-standard ways, which makes them hard to label and learn from accurately.

Moreover, the spatial relationships between fingers, palms, and wrists must be precisely maintained. A slight misalignment, such as a finger bending backward or merging with another, immediately triggers the "uncanny valley" effect, where something looks almost right but feels deeply off-putting.

Training Data Limitations and Biases

AI models learn by analyzing millions, and often billions, of images scraped from the internet. However, the distribution of hand poses in these datasets is highly skewed. Most images show hands in common positions (typing, holding phones, gesturing during speeches), while rare or complex poses, such as playing the piano or signing, are underrepresented.

This imbalance leads to poor generalization. The model hasn’t seen enough variation to understand how fingers should align when forming a fist, pointing, or gripping an object. As a result, it defaults to statistically likely configurations — even if they’re anatomically incorrect.

Additionally, many online images used for training are low-resolution or cropped. Hands at the edge of frames are often cut off, leading the model to associate partial hands with normalcy. In some cases, the AI may generate five fingers on one hand and four on the other simply because it has learned inconsistent patterns from flawed examples.

“Hands are deceptively complex. They require understanding both structure and intention — something current models infer poorly due to fragmented training signals.” — Dr. Lena Torres, Computer Vision Researcher at MIT

Architectural Challenges in Diffusion Models

Most modern AI image generators, including Stable Diffusion and recent versions of DALL·E, use diffusion models, which start with random noise and gradually refine it into a coherent image guided by a text prompt. While effective overall, this process struggles with fine-grained details like fingers.

During denoising, the model prioritizes global composition — layout, color, major shapes — before focusing on small features. By the time it reaches hand-level detail, contextual coherence may already be compromised. If the arm position doesn’t logically lead to a plausible hand orientation, the model improvises, often creating impossible geometries.
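The coarse-to-fine behavior follows from how noise is scheduled. The sketch below assumes the linear variance schedule from the original DDPM paper (beta rising from 1e-4 to 0.02 over 1,000 steps), not any particular product's settings, and computes how much of the clean image's signal, `sqrt(alpha_bar_t)`, survives at each step. Sampling runs from t = 1000 down to t = 1, so the earliest denoising steps work on inputs that are almost pure noise, where only layout and large shapes can be resolved.

```python
import math


def linear_betas(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, spaced linearly (the DDPM paper's default schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + step * i for i in range(num_steps)]


def signal_coefficients(betas):
    """Return sqrt(alpha_bar_t) for t = 1..T.

    In the forward process x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise,
    so this coefficient measures how much of the clean image x_0 is still present at step t.
    """
    coefficients = []
    alpha_bar = 1.0
    for beta in betas:
        alpha_bar *= 1.0 - beta  # cumulative product of (1 - beta_s) for s <= t
        coefficients.append(math.sqrt(alpha_bar))
    return coefficients


if __name__ == "__main__":
    coeffs = signal_coefficients(linear_betas())
    # Sampling visits these steps from t = 1000 (almost pure noise) down to t = 1.
    for t in (1000, 750, 500, 250, 1):
        print(f"t={t:4d}  signal coefficient={coeffs[t - 1]:.3f}")
```

Production models use other schedules and samplers that skip steps, so the exact numbers differ; the point is the shape of the curve. Fingers are fine detail that only becomes resolvable late in sampling, roughly after the overall composition has settled.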

Another issue is spatial resolution. Text-to-image models typically work on a compressed grid rather than raw pixels (Stable Diffusion 1.x, for example, denoises a 64×64 latent for a 512×512 image), and their attention layers treat each grid position or patch as a token. A hand that is small relative to the whole image covers only a small fraction of these positions, leaving the model little capacity to represent five distinct fingers. This makes hands vulnerable to distortion, especially when multiple hands or intricate interactions are involved.
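To make the resolution argument concrete, the sketch below counts how many latent cells a hand's bounding box overlaps. It assumes an 8× downsampling factor, which matches Stable Diffusion 1.x's autoencoder (512×512 pixels to a 64×64 latent); the optional `patch` factor stands in for a coarser attention level or a transformer that groups latent cells into tokens. The hand coordinates in the example are made up for illustration.

```python
import math


def latent_footprint(box_px, downsample=8, patch=1):
    """Count latent cells (or tokens) overlapped by a pixel-space bounding box.

    box_px: (x0, y0, x1, y1) in pixels, with x1 and y1 exclusive.
    downsample: pixels per latent cell along each axis (8 assumed, as in Stable Diffusion 1.x).
    patch: latent cells per token along each axis (1 = one token per latent cell).
    """
    x0, y0, x1, y1 = box_px
    cell = downsample * patch
    cols = math.ceil(x1 / cell) - x0 // cell
    rows = math.ceil(y1 / cell) - y0 // cell
    return cols * rows


if __name__ == "__main__":
    whole_image = latent_footprint((0, 0, 512, 512))  # 64 x 64 = 4096 cells
    hand = latent_footprint((200, 300, 240, 348))     # a 40 x 48 px hand (hypothetical)
    print(f"hand covers {hand} of {whole_image} cells ({hand / whole_image:.2%})")
```

In that example the hand overlaps 35 of 4,096 cells, under 1% of the grid, in which to express five fingers, a palm, and every joint between them.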

Common Hand Generation Failures

| Fault Type | Description | Frequency in Outputs |
|---|---|---|
| Extra Fingers | Six or more digits on a single hand | High |
| Fused Digits | Fingers merged into a single mass | Very High |
| Reverse Joints | Fingers bending backward unnaturally | Moderate |
| Missing Parts | Palm or wrist discontinuity | High |
| Asymmetry | Uneven finger count or size between hands | Moderate |
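The categories in the table can double as a review checklist. As a minimal sketch, assuming each hand has already been annotated (by a person or by some upstream detector, which is hypothetical and not shown), the rules below map those annotations to the table's fault types. Only digit count is compared for Asymmetry; size differences would need additional measurements.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HandAnnotation:
    """Hypothetical per-hand measurements; how they are obtained is out of scope here."""
    digit_count: int
    fused_pairs: int = 0           # adjacent digits that merge into one mass
    hyperextended_joints: int = 0  # finger joints bent backward past their natural range
    wrist_connected: bool = True   # palm flows continuously into wrist and forearm


def classify_faults(hands: List[HandAnnotation]) -> List[str]:
    """Return the fault types from the table that apply to one image's hands."""
    faults = set()
    for hand in hands:
        if hand.digit_count > 5:
            faults.add("Extra Fingers")
        if hand.fused_pairs > 0:
            faults.add("Fused Digits")
        if hand.hyperextended_joints > 0:
            faults.add("Reverse Joints")
        if not hand.wrist_connected:
            faults.add("Missing Parts")
    # Asymmetry only makes sense for a pair of hands; compare their digit counts.
    if len(hands) == 2 and hands[0].digit_count != hands[1].digit_count:
        faults.add("Asymmetry")
    return sorted(faults)


if __name__ == "__main__":
    print(classify_faults([HandAnnotation(6), HandAnnotation(5)]))  # ['Asymmetry', 'Extra Fingers']
```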

Solutions and Workarounds in Practice

While no AI system currently generates perfect hands consistently, several strategies help mitigate the issue:

- Post-generation editing: Use image editors like Photoshop or GIMP to manually correct hand anatomy after generation.

- ControlNet integration: Tools like ControlNet allow users to input pose skeletons or edge maps, guiding the AI to place hands correctly.

- Prompt engineering: Specify simple, stable hand positions (“palms together,” “hands behind back”) to avoid complex articulation.

- Masking and inpainting: Generate the full image, then mask the faulty hand and regenerate only that region with a corrected prompt.
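For the masking-and-inpainting route, the mask is usually just a padded box around the faulty hand. Here is a minimal sketch in plain Python, assuming the hand's bounding box is already known (the box and padding values are hypothetical). Padding lets the regenerated hand blend into the wrist and background instead of ending at a hard seam.

```python
def hand_inpaint_mask(width, height, box, pad=16):
    """Return a height x width grid with 255 where the image should be regenerated, 0 elsewhere.

    box: (x0, y0, x1, y1) pixel bounds of the faulty hand, with x1 and y1 exclusive.
    pad: extra margin, in pixels, added on every side and clamped to the image edges.
    """
    x0, y0, x1, y1 = box
    x0, y0 = max(0, x0 - pad), max(0, y0 - pad)
    x1, y1 = min(width, x1 + pad), min(height, y1 + pad)
    return [
        [255 if (x0 <= x < x1 and y0 <= y < y1) else 0 for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    mask = hand_inpaint_mask(512, 512, box=(200, 300, 240, 348))
    masked = sum(value == 255 for row in mask for value in row)
    print(f"{masked} pixels marked for regeneration")
```

Hugging Face's diffusers inpainting pipelines, for example, repaint white (255) mask pixels and keep black ones. With Pillow, the rows can be flattened into an `"L"`-mode image via `Image.new` and `Image.putdata` before being passed along. Other tools may use the opposite convention, so it is worth checking first.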

Mini Case Study: Digital Artist Adapts Workflow

Lena Cho, a concept artist working in game design, initially relied heavily on MidJourney for character ideation. However, she found that nearly 70% of her full-body outputs required hand corrections, slowing her workflow. After experimenting with ControlNet and OpenPose, she began sketching rough hand positions first, converting them into stick-figure guides for the AI. This hybrid approach reduced hand errors by over 80%, allowing her to focus on creativity rather than cleanup.

“I used to dread full-hand shots,” she said. “Now I guide the AI instead of fighting it. It’s not perfect, but it’s usable.”
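The stick-figure guides in this kind of workflow are keypoint skeletons. The sketch below covers the hand part only, assuming the 21-keypoint layout OpenPose uses for hands (MediaPipe Hands uses the same ordering): index 0 is the wrist, followed by four points per digit from base to tip, thumb first. Because the skeleton contains exactly five finger chains, a pose-conditioned model receives an explicit digit count to follow. The rendering step (line colors, thickness) is omitted.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

# Digit order in the 21-keypoint hand layout; keypoint 0 is the wrist.
DIGITS = ("thumb", "index", "middle", "ring", "pinky")


def hand_skeleton_edges() -> List[Tuple[int, int]]:
    """Bones as (start, end) keypoint indices: wrist -> base -> ... -> fingertip for each digit."""
    edges = []
    for d in range(len(DIGITS)):
        base = 1 + 4 * d
        chain = [0, base, base + 1, base + 2, base + 3]
        edges.extend(zip(chain, chain[1:]))
    return edges


def skeleton_segments(keypoints: Sequence[Point]) -> List[Tuple[Point, Point]]:
    """Convert 21 (x, y) keypoints into the line segments of a stick-figure hand guide."""
    if len(keypoints) != 21:
        raise ValueError(f"expected 21 hand keypoints, got {len(keypoints)}")
    return [(keypoints[a], keypoints[b]) for a, b in hand_skeleton_edges()]
```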

Future Directions and Emerging Fixes

Researchers are actively addressing the hand problem through targeted improvements:

- Better datasets: Projects like HandDB and SynHand5M aim to provide large-scale, annotated hand images across diverse poses and skin tones.

- Local refinement modules: Some models now include specialized sub-networks trained exclusively on hand regions to improve detail accuracy.

- 3D-aware generation: Integrating 3D hand models into 2D generators helps maintain anatomical plausibility regardless of viewpoint.

- User feedback loops: Systems that learn from user corrections could adapt over time, reducing recurring mistakes.
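The geometric idea behind 3D-aware generation can be shown without any learned model. In the sketch below, which is an illustration only (the coordinates, focal length, and camera placement are assumptions, and real systems fit a full parametric hand model rather than a few points), a fixed 3D skeleton is rotated and projected through a pinhole camera. The 2D layout changes with viewpoint, but bone lengths and the number of joints cannot, which is exactly the constraint a purely 2D generator lacks.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def rotate_y(p: Point3, angle: float) -> Point3:
    """Rotate a 3D point about the vertical (y) axis by `angle` radians."""
    x, y, z = p
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def project(p: Point3, focal: float = 500.0, center: Point2 = (256.0, 256.0),
            distance: float = 400.0) -> Point2:
    """Pinhole projection; the object sits `distance` units in front of the camera."""
    x, y, z = p
    depth = z + distance
    return (center[0] + focal * x / depth, center[1] + focal * y / depth)


def render_view(joints: List[Point3], angle: float) -> List[Point2]:
    """2D keypoints of the same 3D skeleton seen from a different viewpoint."""
    return [project(rotate_y(j, angle)) for j in joints]


if __name__ == "__main__":
    # Hypothetical index-finger chain (wrist, knuckle, two joints, tip); units are arbitrary.
    finger = [(0.0, 0.0, 0.0), (10.0, -60.0, 0.0), (12.0, -90.0, 0.0),
              (13.0, -110.0, 0.0), (14.0, -125.0, 0.0)]
    for degrees in (0, 45, 90):
        view = render_view(finger, math.radians(degrees))
        print(degrees, [tuple(round(c, 1) for c in p) for p in view])
```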

Companies like Runway ML and Stability AI are testing fine-tuned versions of their models with enhanced hand performance. Early results show promise, though widespread deployment remains months away.

Checklist: Minimizing Hand Errors in AI Art

- ✅ Use clear, simple hand pose descriptions in your prompt

- ✅ Add negative prompts to exclude known issues (e.g., “malformed hands”)

- ✅ Leverage ControlNet or similar tools for pose guidance

- ✅ Generate close-ups separately from full-body images

- ✅ Edit hands manually or via inpainting when needed

- ✅ Test multiple generations to find one with acceptable hand structure
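Several checklist items can be folded into how a batch of generations is set up. The sketch below is hypothetical: the `GenerationJob` structure and the negative-term list are illustrative, although the `prompt` and `negative_prompt` field names mirror arguments accepted by Hugging Face diffusers pipelines. It pairs a simple hand-pose phrase with a negative prompt, keeps close-ups separate from full-body shots, and fans out over several seeds so the best result can be picked afterward.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Illustrative negative terms; which phrases actually help varies by model.
NEGATIVE_HAND_TERMS = ("malformed hands", "extra fingers", "fused fingers", "missing fingers")


@dataclass(frozen=True)
class GenerationJob:
    prompt: str
    negative_prompt: str
    seed: int


def plan_jobs(subject: str, hand_pose: str, seeds: Iterable[int],
              close_up: bool = False) -> List[GenerationJob]:
    """Build one job per seed, with close-ups and full-body shots kept as separate batches."""
    framing = "close-up of hands" if close_up else "full-body shot"
    prompt = f"{subject}, {hand_pose}, {framing}"
    negative = ", ".join(NEGATIVE_HAND_TERMS)
    return [GenerationJob(prompt, negative, seed) for seed in seeds]


if __name__ == "__main__":
    for job in plan_jobs("a knight in plate armor", "hands behind back", seeds=range(4)):
        print(job)
```

Each job would then go to whatever generator is in use, with the outputs reviewed against the fault types listed earlier before the best candidate is touched up by inpainting.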

FAQ

Will AI ever generate perfect hands consistently?

It’s likely, but not imminent. Advances in training data, model architecture, and 3D integration should steadily close the gap. However, achieving 100% accuracy across all poses and contexts may take several more years.

Are some AI models better at hands than others?

Yes. Models fine-tuned on artistic anatomy or integrated with pose control (like Stable Diffusion + ControlNet) perform significantly better than base versions of DALL·E or MidJourney without add-ons.

Why don’t developers just fix this already?

The hand issue stems from fundamental limitations in data quality and model design, not a single bug. Fixing it requires retraining on vast, curated datasets and architectural changes — both resource-intensive processes.

Conclusion

The struggle AI faces with generating realistic hands reveals a critical truth: intelligence isn’t just about pattern recognition. It’s about understanding function, physics, and context. Hands aren’t just shapes — they grasp, point, express emotion, and interact with the world in dynamic ways. Until AI can simulate that depth of understanding, glitches will persist.

Yet, progress is undeniable. With smarter tools, better data, and creative workarounds, users today can already produce compelling art — even if the hands need a little help. The journey toward flawless AI-generated anatomy continues, driven by both technical innovation and human ingenuity.
