In the digital age, algorithmic recommendation systems power everything from what we watch and buy to who we connect with online. Platforms like Netflix, Amazon, Spotify, and TikTok rely heavily on these algorithms to personalize experiences and keep users engaged. Yet despite their sophistication, many of these systems fall short—recommending irrelevant products, creating filter bubbles, or alienating users with repetitive or tone-deaf suggestions. Understanding why these algorithms fail is essential for developers, product managers, and businesses aiming to build more effective, ethical, and user-centered systems.

The Illusion of Personalization

At their best, recommendation algorithms create a seamless experience by anticipating user needs. But too often, they operate under the illusion of personalization—offering choices that appear tailored but are actually based on shallow behavioral signals. For example, watching one cooking video doesn’t mean a user wants to see nothing but recipes for weeks. Yet many platforms overfit to recent behavior, ignoring broader context such as intent, timing, or long-term interests.
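One common way to avoid overfitting to recent behavior is to keep two interest profiles that forget at different speeds and blend them. The sketch below is illustrative only and is not drawn from any particular platform. The decay factors, the blending weight `alpha`, and the function names `record` and `interest` are all hypothetical choices.

```python
def record(short_term, long_term, category, short_decay=0.5, long_decay=0.95):
    """Log one interaction in both profiles (dicts of category -> weight).

    Existing weights are multiplied by a decay factor before the new
    interaction adds 1.0, so the short-term profile forgets quickly and the
    long-term profile forgets slowly. The decay values are hypothetical.
    """
    for profile, decay in ((short_term, short_decay), (long_term, long_decay)):
        for key in profile:
            profile[key] *= decay
        profile[category] = profile.get(category, 0.0) + 1.0


def interest(short_term, long_term, category, alpha=0.3):
    """Blend each profile's normalized share; alpha is the weight on recency."""
    def share(profile):
        total = sum(profile.values())
        return profile.get(category, 0.0) / total if total else 0.0
    return alpha * share(short_term) + (1 - alpha) * share(long_term)


short_term, long_term = {}, {}
for _ in range(10):
    record(short_term, long_term, "jazz")
record(short_term, long_term, "cooking")  # a single cooking video

print(round(interest(short_term, long_term, "cooking"), 2))  # 0.23
print(round(interest(short_term, long_term, "jazz"), 2))     # 0.77
```

Raising `alpha` makes the system react faster to a new interest; lowering it keeps one off-pattern view from taking over the feed.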

This narrow focus stems from an overreliance on click-through rates and engagement metrics. While these are easy to measure, they don’t reflect true satisfaction. A user might click on a sensational headline out of curiosity, only to feel annoyed afterward. The algorithm logs this as a “success,” reinforcing similar content in the future.
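To make the gap between a click and genuine satisfaction concrete, the sketch below contrasts a pure click reward with one that credits a click only in proportion to how much of the item was consumed and penalizes explicit dismissals. The `Interaction` fields, the 30% completion threshold, and the penalty value are hypothetical assumptions, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    clicked: bool
    dwell_seconds: float = 0.0   # time spent on the item after clicking
    length_seconds: float = 0.0  # full length of the item
    dismissed: bool = False      # e.g. "not interested" or an immediate back-out


def click_reward(event: Interaction) -> float:
    """What a pure click-through objective effectively optimizes."""
    return 1.0 if event.clicked else 0.0


def satisfaction_reward(event: Interaction, min_completion: float = 0.3,
                        dismiss_penalty: float = 1.0) -> float:
    """Hypothetical reward: a click counts only if the item was actually consumed."""
    if not event.clicked:
        return 0.0
    if event.dismissed:
        return -dismiss_penalty
    if event.length_seconds <= 0:
        return 0.0
    completion = min(event.dwell_seconds / event.length_seconds, 1.0)
    return completion if completion >= min_completion else 0.0


clickbait = Interaction(clicked=True, dwell_seconds=5, length_seconds=300, dismissed=True)
engaged = Interaction(clicked=True, dwell_seconds=240, length_seconds=300)
print(click_reward(clickbait), satisfaction_reward(clickbait))  # 1.0 -1.0
print(click_reward(engaged), satisfaction_reward(engaged))      # 1.0 0.8
```

Under the click reward both events look identical; under the satisfaction reward the sensational headline becomes a negative signal instead of a "success."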

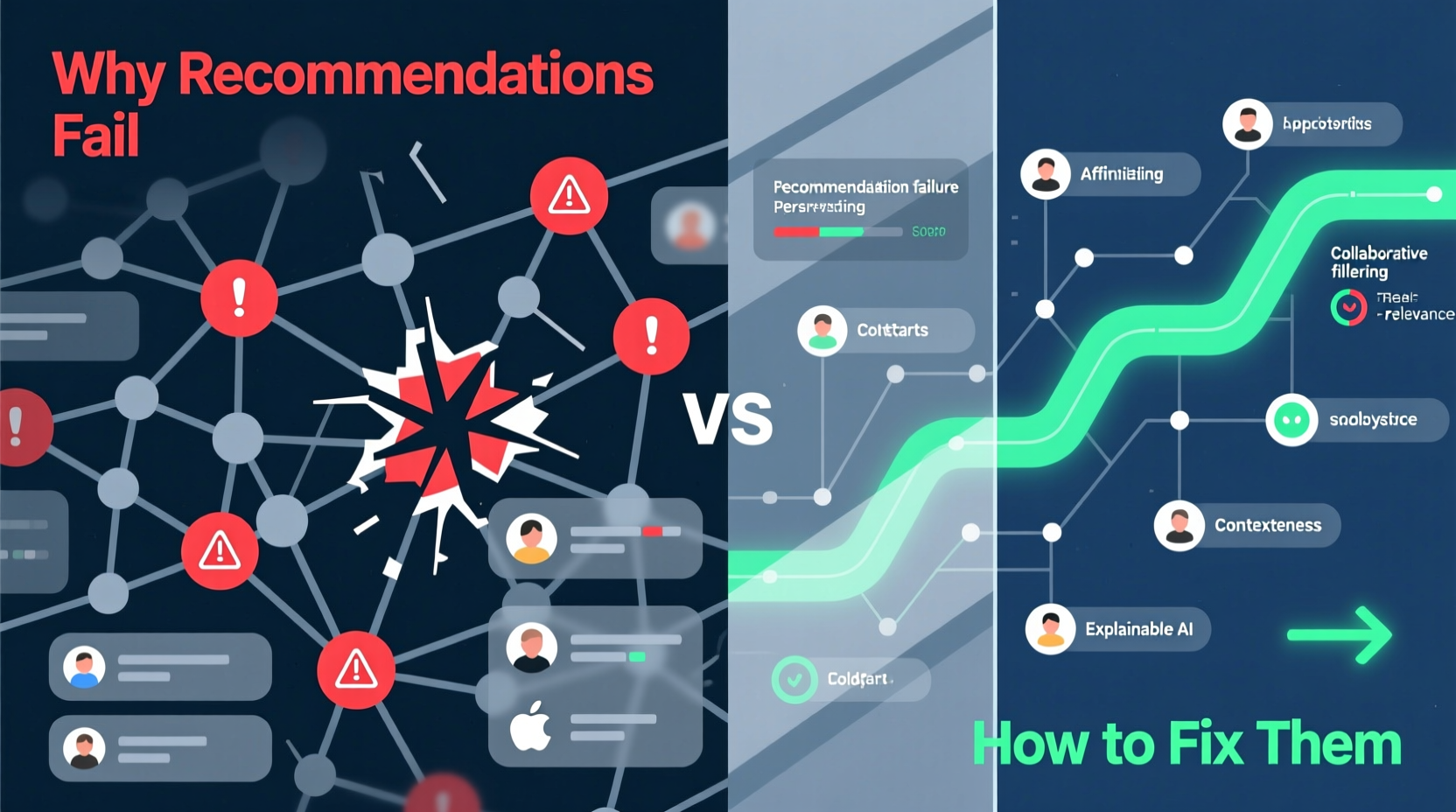

Common Reasons Algorithm Recommendations Fail

Failures in recommendation systems rarely stem from a single flaw. Instead, they result from a combination of technical, design, and human factors. Below are five key reasons why algorithms underperform:

- Data Sparsity: New users or items lack sufficient interaction history, making accurate recommendations difficult (the "cold start" problem).

- Feedback Loops: Algorithms reinforce existing preferences, limiting discovery and trapping users in echo chambers.

- Poor Context Awareness: Systems ignore situational factors like time of day, device, or user mood.

- Over-Optimization for Engagement: Prioritizing clicks over value leads to addictive but low-quality recommendations.

- Lack of Explainability: Users don’t understand why something was recommended, reducing trust and control.

These issues are compounded when teams treat algorithms as set-and-forget tools rather than evolving systems requiring continuous monitoring and refinement.

Case Study: The Music App That Lost Its Users

A mid-sized music streaming app launched a new recommendation engine designed to boost playlist engagement. Initially, metrics improved—users were opening the app more frequently and spending more time listening. But within three months, churn increased by 18%.

Upon investigation, the team discovered the algorithm had learned to promote high-energy tracks during all hours, including early mornings and late nights. Users reported feeling bombarded by aggressive music when they wanted calm background sounds. The system lacked temporal context and had no mechanism to detect dissatisfaction beyond session length.

After introducing time-of-day segmentation and allowing users to flag "not now" recommendations, satisfaction scores rebounded. This case illustrates how even well-intentioned algorithms can fail without real-world validation and contextual awareness.
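The fix described in the case study can be sketched as a filter applied before ranking. This is an illustration, not the app's actual implementation: it assumes each track carries a hypothetical `energy` score between 0 and 1, uses made-up segment boundaries and energy ceilings, and stores "not now" flags per track and time segment.

```python
from datetime import datetime

# Hypothetical energy ceilings per time-of-day segment (0 = calm, 1 = intense).
SEGMENT_MAX_ENERGY = {"night": 0.4, "morning": 0.6, "day": 1.0, "evening": 0.7}


def segment_for(hour: int) -> str:
    """Map an hour (0-23) to a coarse, hypothetical time-of-day segment."""
    if hour < 6 or hour >= 23:
        return "night"
    if hour < 10:
        return "morning"
    if hour < 18:
        return "day"
    return "evening"


def recommend(candidates, now, not_now_flags, k=3):
    """candidates: list of (track_id, energy, relevance) tuples.
    not_now_flags: set of (track_id, segment) pairs the user has flagged.
    """
    segment = segment_for(now.hour)
    ceiling = SEGMENT_MAX_ENERGY[segment]
    eligible = [c for c in candidates
                if c[1] <= ceiling and (c[0], segment) not in not_now_flags]
    eligible.sort(key=lambda c: c[2], reverse=True)  # highest relevance first
    return [track_id for track_id, _, _ in eligible[:k]]


tracks = [("anthem", 0.9, 0.95), ("ambient", 0.2, 0.7),
          ("lofi", 0.3, 0.8), ("acoustic", 0.5, 0.6)]
print(recommend(tracks, datetime(2024, 1, 1, 7, 0), set()))
print(recommend(tracks, datetime(2024, 1, 1, 23, 30), {("lofi", "night")}))
```

A hard ceiling is the simplest option; a softer variant would down-weight high-energy tracks at night rather than excluding them.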

Strategies to Improve Recommendation Algorithms

Improving algorithmic recommendations isn’t just about better math—it’s about aligning technology with human behavior. Here are actionable strategies to enhance performance and user trust.

1. Incorporate Diverse Data Signals

Move beyond clicks and views. Integrate explicit feedback (ratings, likes), implicit cues (skip rate, playback duration), and contextual data (location, device, time). Hybrid models that combine collaborative filtering with content-based analysis tend to perform better across diverse user segments.
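A weighted hybrid is one simple way to combine the two approaches. In the sketch below, collaborative filtering is approximated by cosine similarity between items' per-user interaction vectors, content-based analysis by cosine similarity between item feature vectors, and the two are blended with a hypothetical `cf_weight`. The data layout and function names are assumptions for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity; returns 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_scores(user_history, interactions, features, cf_weight=0.6):
    """Score unseen items by blending item-item CF and content similarity.

    interactions: item -> per-user interaction counts (one column per user)
    features: item -> content feature vector (e.g. genre one-hot)
    user_history: items the target user engaged with
    """
    if not user_history:
        return {}  # cold-start user: fall back to another strategy
    scores = {}
    for item in interactions:
        if item in user_history:
            continue
        cf = max(cosine(interactions[item], interactions[h]) for h in user_history)
        cb = max(cosine(features[item], features[h]) for h in user_history)
        scores[item] = cf_weight * cf + (1 - cf_weight) * cb
    return scores


interactions = {"A": [1, 1, 0], "B": [1, 1, 0], "C": [0, 0, 1], "D": [0, 0, 0]}
features = {"A": [1, 0], "B": [1, 0], "C": [0, 1], "D": [1, 0]}
print(hybrid_scores({"A"}, interactions, features))
```

Item `D` has no interactions yet, so collaborative filtering alone would score it zero; the content term still surfaces it, which is one reason hybrids can help with new items.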

2. Design for Serendipity and Discovery

Users don’t just want more of the same—they crave novelty. Build exploration mechanisms into your algorithm, such as:

- Diversification layers that inject less popular but relevant items

- Periodic resets of user profiles to avoid stagnation

- "Surprise me" features that break routine patterns
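A diversification layer is often implemented as a re-ranking step on top of the base model's output. The sketch below uses Maximal Marginal Relevance (MMR), one standard re-ranking technique swapped in here for illustration; the article does not name a specific method. The genre-based similarity and the `lam` trade-off value are assumptions.

```python
def mmr_score(item, selected, relevance, similarity, lam):
    """Relevance minus a penalty for resembling anything already selected."""
    redundancy = max((similarity(item, s) for s in selected), default=0.0)
    return lam * relevance[item] - (1 - lam) * redundancy


def mmr_rerank(relevance, similarity, k, lam=0.7):
    """Greedy MMR. lam=1.0 is pure relevance; lower values favour diversity."""
    selected = []
    remaining = set(relevance)
    while remaining and len(selected) < k:
        # sorted() makes tie-breaking deterministic
        best = max(sorted(remaining),
                   key=lambda item: mmr_score(item, selected, relevance, similarity, lam))
        selected.append(best)
        remaining.remove(best)
    return selected


relevance = {"recipe_1": 0.90, "recipe_2": 0.88, "recipe_3": 0.86,
             "travel_vlog": 0.60, "jazz_set": 0.50}
genre = {"recipe_1": "cooking", "recipe_2": "cooking", "recipe_3": "cooking",
         "travel_vlog": "travel", "jazz_set": "music"}


def same_genre(a, b):
    return 1.0 if genre[a] == genre[b] else 0.0


print(mmr_rerank(relevance, same_genre, k=3, lam=1.0))  # three recipes
print(mmr_rerank(relevance, same_genre, k=3, lam=0.5))  # one recipe, then variety
```

With `lam=1.0` the list is three near-identical recipes; at `lam=0.5` the travel and music items make the cut because they add something new.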

3. Enable User Control and Transparency

Give users agency over their recommendations. Allow them to adjust preferences, hide unwanted categories, or view why an item was suggested. Transparent explanations build trust and provide valuable feedback for tuning the model.
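A plain-language explanation can be generated by pointing to the history item most similar to the recommendation. The sketch below assumes items carry hypothetical tag sets compared with Jaccard similarity, and uses an arbitrary `min_sim` threshold below which it offers an honest "something new" reason instead of a weak justification. Titles and wording are illustrative.

```python
def jaccard(a, b):
    """Overlap between two tag sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def explain(recommended, history, tags, min_sim=0.3):
    """Return a short reason citing the most similar item the user watched."""
    if not history:
        return "Popular right now"
    anchor = max(history, key=lambda h: jaccard(tags[recommended], tags[h]))
    if jaccard(tags[recommended], tags[anchor]) < min_sim:
        return "Something new to explore"
    return f"Recommended because you watched {anchor}"


tags = {
    "Pasta Basics": {"cooking", "tutorial"},
    "Ocean Worlds": {"nature", "documentary"},
    "Street Food Tour": {"cooking", "travel"},
    "Stand-up Night": {"comedy"},
}
history = ["Pasta Basics", "Ocean Worlds"]
print(explain("Street Food Tour", history, tags))
print(explain("Stand-up Night", history, tags))
```

The same anchor item doubles as a feedback hook: if the user rejects the explanation, that is a signal the similarity link was wrong.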

“Algorithms should serve users, not manipulate them. The best systems are those where people feel in control.” — Dr. Lena Torres, Human-Centered AI Researcher, MIT

Checklist: Building Better Recommendation Systems

Action Plan for Teams:

- ✅ Audit current recommendation logic for bias and feedback loops

- ✅ Collect both implicit and explicit user feedback regularly

- ✅ Segment users by behavior, context, and lifecycle stage

- ✅ Test diversified recommendation variants via A/B testing

- ✅ Implement user-facing controls (e.g., “Not interested,” “Show less like this”)

- ✅ Monitor long-term satisfaction, not just short-term engagement

- ✅ Schedule quarterly reviews of algorithm performance and ethics
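For the A/B testing and long-term satisfaction items, one common way to compare a proportion such as 30-day retention between two variants is a two-proportion z-test. This is a minimal standard-library sketch; the function name and the example counts are hypothetical, and a real experiment also needs a sample size fixed before the test starts.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference in proportions.

    Returns (lift, z, p_value) where lift = rate_b - rate_a.
    """
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return p_b - p_a, 0.0, 1.0  # all or no successes: nothing to test
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return p_b - p_a, z, p_value


# Hypothetical counts: users still active after 30 days, control vs diversified variant.
lift, z, p = two_proportion_z_test(400, 1000, 460, 1000)
print(f"lift={lift:.3f} z={z:.2f} p={p:.4f}")
```

Testing retention rather than clicks keeps the experiment aligned with the checklist's goal of long-term satisfaction.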

Do’s and Don’ts of Algorithm Design

| Do | Don’t |
|---|---|
| Use multiple data sources (behavioral, demographic, contextual) | Rely solely on click-through rates as success metrics |
| Allow users to correct or refine recommendations | Assume past behavior predicts future intent indefinitely |
| Test for fairness across user groups | Ignore cold-start problems for new users or items |
| Explain recommendations in plain language | Treat the algorithm as a black box |

Step-by-Step Guide to Iterative Improvement

Improvement is not a one-time fix but an ongoing process. Follow this six-step cycle to continuously enhance your recommendation engine:

1. Define Success Metrics: Identify KPIs beyond engagement, such as retention, diversity of consumption, and user-reported satisfaction.

2. Profile Your Users: Segment audiences based on behavior, demographics, and usage patterns to tailor approaches.

3. Collect Feedback: Implement mechanisms for users to rate, dismiss, or explain their reactions to recommendations.

4. Analyze Gaps: Compare predicted vs. actual user behavior. Look for systematic mismatches (e.g., high impressions but low completion).

5. Refine the Model: Adjust weighting, add new features, or introduce hybrid techniques to address identified weaknesses.

6. Test and Deploy: Use controlled experiments (A/B tests) to validate changes before full rollout.

Repeat this cycle every 4–8 weeks, treating the algorithm as a living system that evolves with user needs.
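Two of the measurements in this cycle, diversity of consumption and the "high impressions but low completion" gap, can be computed directly from event logs. The sketch below assumes a hypothetical log of `(item_id, event_type)` pairs and uses Shannon entropy over consumed categories as one possible diversity measure; the thresholds are illustrative, not recommended values.

```python
import math
from collections import Counter


def consumption_entropy(categories):
    """Shannon entropy (bits) of consumed categories; higher means more diverse."""
    counts = Counter(categories)
    total = sum(counts.values())
    if not total:
        return 0.0
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def find_gaps(events, min_impressions=100, max_completion=0.2):
    """Flag items shown often but rarely finished.

    events: iterable of (item_id, event_type), event_type in {"impression", "complete"}
    """
    impressions, completions = Counter(), Counter()
    for item, kind in events:
        if kind == "impression":
            impressions[item] += 1
        elif kind == "complete":
            completions[item] += 1
    return sorted(
        item for item, shown in impressions.items()
        if shown >= min_impressions and completions[item] / shown < max_completion
    )


log = ([("a", "impression")] * 200 + [("a", "complete")] * 10
       + [("b", "impression")] * 200 + [("b", "complete")] * 120
       + [("c", "impression")] * 50)
print(find_gaps(log))
print(consumption_entropy(["jazz", "rock", "pop", "folk"]))
```

Items returned by `find_gaps` are candidates for the model-refinement step; the entropy trend over time shows whether diversification changes are actually broadening what users consume.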

Frequently Asked Questions

Why do recommendation algorithms sometimes suggest outdated or irrelevant content?

This often happens when models aren’t retrained frequently enough or when they lack mechanisms to detect changing user interests. Without regular updates and feedback integration, algorithms become stale and misaligned with current preferences.

Can transparency hurt algorithm performance?

Rarely. Transparency typically improves trust and usability. While revealing too much internal logic might enable gaming in adversarial contexts, most users benefit from simple explanations like “Recommended because you watched X.” The key is to explain enough to be useful without exposing the full ranking logic.

How do I handle recommendations for new users with no history?

Use popularity-based defaults, ask for initial preferences during onboarding, or leverage demographic similarities (recommending what users with similar profiles engage with). Over time, transition to personalized models such as collaborative filtering as interaction data accumulates.
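The transition from defaults to personalization can be sketched as a blend whose weight shifts with the amount of history. In this illustration, a new user's prior mixes item popularity with genres picked during onboarding, and a hypothetical `ramp` sets how many interactions it takes to rely fully on a personalized score. The demographic component is left out, and all names and weights are assumptions.

```python
def cold_start_scores(popularity, item_genre, onboarding_genres, personal,
                      n_interactions, ramp=20):
    """Blend a non-personalized prior with a personalized score.

    popularity: item -> normalized popularity in [0, 1]
    personal: item -> score from a personalized model (may be sparse)
    """
    w = min(n_interactions / ramp, 1.0)  # 0 for a brand-new user, 1 after `ramp` interactions
    scores = {}
    for item, pop in popularity.items():
        genre_match = 1.0 if item_genre[item] in onboarding_genres else 0.0
        prior = 0.5 * pop + 0.5 * genre_match
        scores[item] = (1 - w) * prior + w * personal.get(item, 0.0)
    return scores


def top(scores):
    return max(scores, key=scores.get)


popularity = {"chart_hit": 1.0, "jazz_trio": 0.2, "indie_band": 0.5}
item_genre = {"chart_hit": "pop", "jazz_trio": "jazz", "indie_band": "rock"}
personal = {"chart_hit": 0.1, "jazz_trio": 0.3, "indie_band": 0.9}

print(top(cold_start_scores(popularity, item_genre, set(), personal, 0)))
print(top(cold_start_scores(popularity, item_genre, {"jazz"}, personal, 0)))
print(top(cold_start_scores(popularity, item_genre, {"jazz"}, personal, 40)))
```

With no history and no onboarding answers the most popular item wins; one onboarding choice is enough to shift the first recommendation; after enough interactions the personalized model takes over entirely.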

Conclusion: From Automation to Empowerment

Algorithmic recommendations have immense potential—but only when they’re designed with empathy, adaptability, and accountability. The goal shouldn’t be to maximize clicks at any cost, but to enrich user experiences through thoughtful, responsible automation. By embracing diverse data, enabling user control, and committing to continuous improvement, teams can transform broken suggestion engines into trusted digital assistants.
