In the past decade, voice assistants have evolved from novelty features to embedded tools in our daily lives. Whether setting alarms, sending messages, or controlling smart home devices, Siri, Google Assistant, Alexa, and others are designed to simplify interaction with technology. But as expectations rise and artificial intelligence capabilities expand, a growing number of users are asking: are these assistants actually getting better—or are they stagnating, or even regressing?

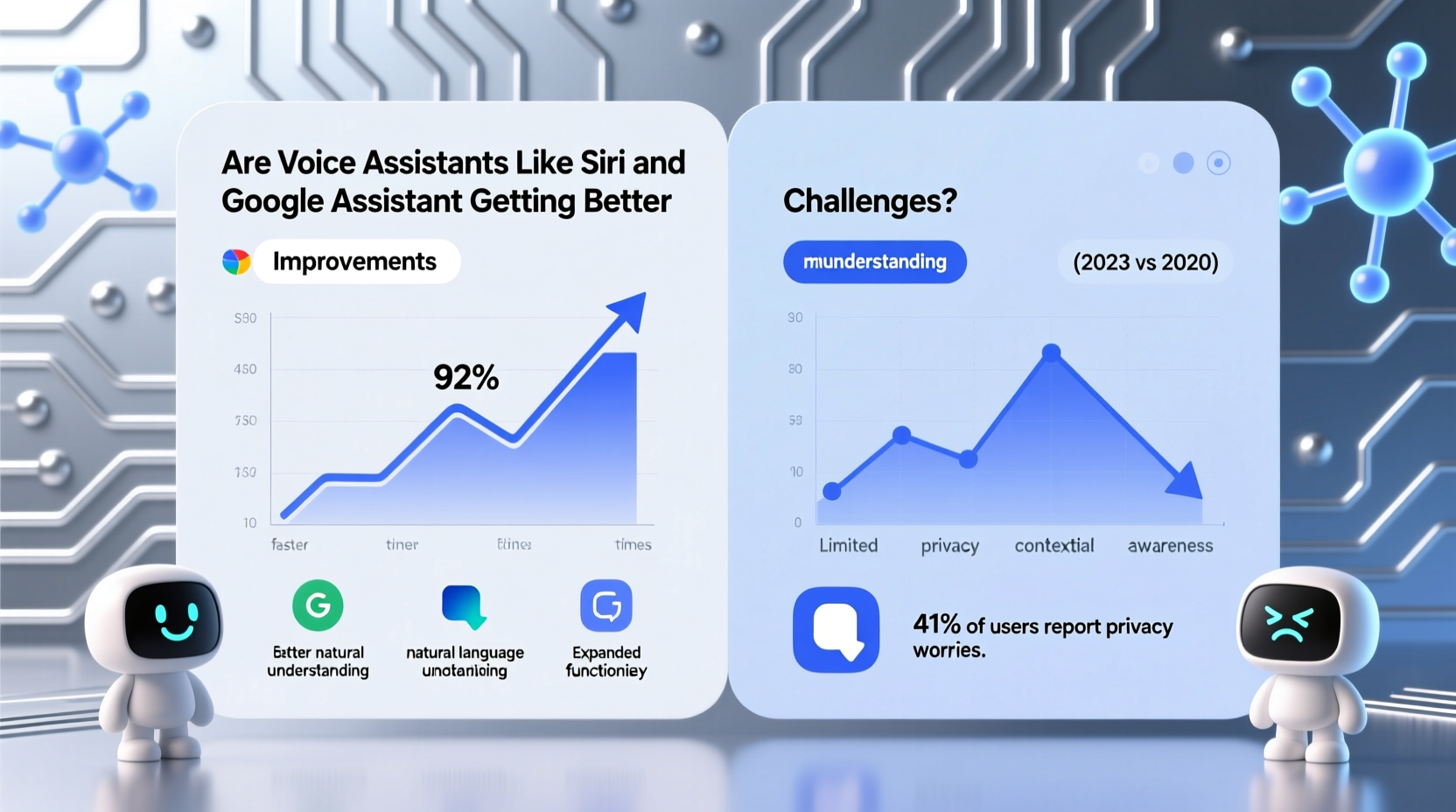

The answer isn’t simple. While technical benchmarks show improvement in speech recognition and natural language understanding, real-world user experiences tell a more nuanced story. Some find their assistants more helpful than ever; others report increased frustration with misinterpretations, limited functionality, and over-reliance on scripted responses.

This article examines the evolution of major voice assistants—particularly Apple’s Siri and Google Assistant—through technological progress, user feedback, industry insights, and practical use cases. We’ll explore what’s improved, where innovation has stalled, and whether we’re moving toward truly intelligent digital companions or just slightly smarter voice-controlled menus.

Technological Advancements Behind Voice Assistants

On paper, voice assistants have made significant strides. Speech recognition accuracy, powered by deep learning models, has improved dramatically since 2010. Google reports that its speech recognition word error rate dropped from around 23% in 2013 to under 5% by 2020. Similarly, Apple has invested heavily in on-device processing, allowing Siri to handle sensitive requests without sending data to the cloud.
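Error rates like the ones quoted above are typically expressed as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the recognized text into the reference transcript, divided by the number of words in the reference. The sketch below is a minimal illustration of that calculation, assuming simple whitespace tokenization and lowercasing; the function name `word_error_rate` and the sample phrases are made up for illustration, and production evaluations normalize text far more carefully.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = word-level edit distance / number of reference words."""
    # Assumption: whitespace tokenization and lowercasing are good enough here.
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein dynamic programming).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion (reference word missed)
                curr[j - 1] + 1,                  # insertion (extra word recognized)
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One wrong word out of five gives a WER of 20%.
    print(word_error_rate("set an alarm for seven", "set an alarm for eleven"))
```

By this measure, a drop from roughly 23% to under 5% means going from about one wrong word in four to fewer than one in twenty, which helps explain why short commands now usually transcribe correctly even when the assistant's response still disappoints.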

Modern assistants now leverage large language models (LLMs), contextual awareness, and multi-turn dialogue systems. For example, Google Assistant can maintain context across several queries (“What’s the weather?” followed by “Will I need an umbrella?”) and perform complex chained tasks like booking reservations using third-party integrations.
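To make the idea of carrying context between turns concrete, here is a deliberately simplified sketch. It is not how Google Assistant or any real product works: the `DialogueState` class, the `interpret` function, the intent labels, and the keyword matching are all hypothetical stand-ins for what is, in practice, a learned language-understanding model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DialogueState:
    """Hypothetical per-conversation memory: just the last topic discussed."""
    topic: Optional[str] = None


def interpret(utterance: str, state: DialogueState) -> str:
    """Map an utterance to a toy intent label, using earlier turns as context."""
    text = utterance.lower()
    if "weather" in text:
        # Remember the topic so a later follow-up can be resolved against it.
        state.topic = "weather"
        return "weather_forecast"
    if "umbrella" in text:
        # The follow-up only makes sense if weather was already being discussed.
        if state.topic == "weather":
            return "rain_probability"
        return "clarify"
    # Without a recognized intent, fall back to a generic web search.
    return "web_search"


if __name__ == "__main__":
    state = DialogueState()
    print(interpret("What's the weather?", state))
    print(interpret("Will I need an umbrella?", state))
```

The point of the toy is the shared `state` object: the second question is only answerable because the first one left a trace. When that memory is missing or reset, the same follow-up falls through to a clarification or a web search, which is the failure mode users tend to notice.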

Apple introduced "Apple Intelligence" in 2024, integrating generative AI directly into iOS 18. This allows Siri to understand nuanced requests, summarize notifications, and generate text creatively, all while prioritizing privacy through on-device processing.

Yet, despite these upgrades, many users don’t perceive a dramatic leap in everyday usefulness. Why?

User Experience: Progress vs. Frustration

Behind the scenes, voice assistants are more capable than ever. But user satisfaction doesn’t always follow technical advancement. A 2023 Pew Research study found that only 41% of smartphone users engage with voice assistants daily, and nearly 30% say they’ve stopped using them due to unreliability.

Common complaints include:

- Misunderstanding accents or background noise

- Failing to execute multi-step commands

- Offering irrelevant web search results instead of taking action

- Lack of personalization beyond basic preferences

A recurring issue is the gap between marketing promises and actual performance. For instance, Apple demonstrated advanced Siri capabilities at WWDC 2024, showing it could rephrase emails and prioritize alerts intelligently. However, early beta testers noted delays in rollout and inconsistent behavior across device types.

Meanwhile, Google Assistant, once considered the most advanced, has faced criticism for retreating from proactive features and reducing support for older hardware. In 2023, Google discontinued routines for millions of Nest Hub users without replacement, sparking backlash.

“Voice interfaces are stuck in a middle ground—they’re too complex for simple tasks and too simplistic for complex ones.” — Dr. Lena Patel, Human-Computer Interaction Researcher at MIT

Comparison: Siri vs. Google Assistant (2024)

| Feature | Siri (iOS 18 + Apple Intelligence) | Google Assistant (2024 Update) |
|---|---|---|
| Natural Language Understanding | Improved with LLM integration; handles ambiguity better | Strong contextual parsing; excels in follow-up questions |
| Privacy & On-Device Processing | High: most processing occurs locally | Mixed: relies more on cloud for complex queries |
| Smart Home Integration | Excellent within HomeKit ecosystem | Broad compatibility across brands |
| Proactive Assistance | Limited; improving with Apple Intelligence summaries | Reduced compared to earlier versions |
| Third-Party App Support | Growing slowly; restricted by Apple’s guidelines | Extensive, but inconsistent reliability |
| Voice Naturalness | More expressive voices; supports emotional tone detection | Highly natural-sounding responses with prosody variation |

While both platforms have strengths, neither consistently outperforms the other across all categories. Google maintains an edge in search integration and broad device reach, while Apple leads in privacy and seamless ecosystem coordination.

Real-World Case: The Commuter’s Dilemma

Consider James, a software developer in Chicago who uses his iPhone and Pixel tablet daily. He relies on voice assistants during his commute to manage calendars, reply to messages, and check traffic.

Last year, he found Siri often failed to send replies when Bluetooth audio was connected, misheard names, and couldn’t adjust calendar events unless phrased exactly right. After updating to iOS 18, he noticed improvements: Siri now understands phrases like “Move my 3 PM meeting tomorrow because I’ll be in transit,” and offers to draft a message to the attendee.

However, on his Pixel tablet, Google Assistant began prompting him to use “Gemini” for complex queries—a separate app requiring manual activation. Tasks that used to be one command now require switching apps, breaking flow.

James sums it up: “Siri finally feels like it’s catching up, but Google’s fragmentation makes things messier. I’m not sure either is truly ‘smarter’—just differently broken.”

Signs of Improvement—and Warning Flags

Certain indicators suggest voice assistants are progressing:

- Better accent and dialect recognition: Both Google and Apple now train models on diverse speech patterns, improving accessibility.

- On-device AI: Faster response times and enhanced privacy reduce dependency on internet connectivity.

- Integration with generative AI: Summarizing emails, rewriting text, and answering layered questions are becoming standard.

But red flags remain:

- Feature fragmentation: Capabilities vary widely between devices, operating systems, and regions.

- Over-promising, under-delivering: Demos at tech keynotes often showcase ideal scenarios that don’t reflect real-world conditions.

- Decline in proactive help: Early versions of Google Assistant would suggest leaving early for appointments based on traffic. Many such features have been scaled back.

Expert Outlook: Where Do Voice Assistants Go From Here?

Industry leaders agree that voice assistants must evolve beyond command-response mechanics to become anticipatory, adaptive partners.

“The future isn’t just about understanding words—it’s about understanding intent, context, and emotion. Today’s assistants are still largely transactional.” — Dr. Rajiv Mehta, AI Product Lead at Stanford HAI

Companies are experimenting with multimodal interaction—combining voice, vision, and gesture. Apple’s Vision Pro, for example, allows users to control spatial computing environments using Siri with hand gestures. Google is testing Assistant responses that adapt tone based on user stress levels detected via wearable biometrics.

Still, challenges persist. Accents, ambient noise, cultural context, and individual communication styles continue to trip up even the most advanced systems. And unlike chatbots powered by full LLMs (like ChatGPT), voice assistants operate under strict latency, power, and privacy constraints—limiting how much AI they can run locally.

How to Maximize Your Voice Assistant’s Potential Today

Regardless of platform, you can improve your experience with a few strategic adjustments:

Step-by-Step Guide to Optimizing Voice Assistant Performance

- Update your OS regularly: New AI features often arrive with system updates, not standalone app patches.

- Train your assistant: Use consistent phrasing and correct misunderstandings when possible (e.g., “No, I meant John with a 'J'”).

- Enable relevant integrations: Link calendars, messaging apps, and smart home devices to expand functionality.

- Use wake-word alternatives: In quiet environments where calling out a wake word is awkward, try holding the side button (iPhone) or swiping up from a bottom corner of the screen (Android).

- Review voice history: Check past interactions in settings to identify recurring errors or limitations.

Checklist: Getting the Most Out of Siri or Google Assistant

- ✅ Enabled on-device processing

- ✅ Connected to key apps (calendar, email, notes)

- ✅ Set preferred contact nicknames (“Call Mom”)

- ✅ Customized voice and speed settings

- ✅ Disabled unused skills/routines to reduce clutter

- ✅ Tested complex commands monthly to track progress

Frequently Asked Questions

Why does my voice assistant misunderstand me sometimes?

Even advanced systems struggle with background noise, rapid speech, regional accents, or uncommon vocabulary. Speaking clearly and minimizing distractions improves accuracy. Some assistants allow you to retrain voice models for better recognition.

Is Siri really getting better in iOS 18?

Yes. Apple Intelligence brings meaningful upgrades. Siri now handles ambiguous references (“Send her the file” where “her” refers to the last person mentioned), edits text creatively, and acts across apps. However, on iPhone, availability is limited to devices with an A17 Pro chip or later (iPhone 15 Pro and newer), excluding older iPhones.

Should I switch from Google Assistant to another AI tool?

If you want deeper conversational AI, consider using Gemini (Google’s LLM) alongside Assistant. But for hands-free control and smart home tasks, Google Assistant remains one of the most reliable options—despite reduced investment in recent years.

Conclusion: Progress Is Real, But Uneven

So, are voice assistants like Siri and Google Assistant getting better or worse? The evidence points to cautious improvement—with caveats.

Technologically, they are undeniably more advanced. Speech recognition is sharper, contextual awareness is growing, and integration with generative AI opens new possibilities. Siri, long criticized for stagnation, is finally closing the gap. Google Assistant, while facing internal restructuring, still offers robust functionality across Android and smart displays.

Yet user experience lags behind potential. Fragmented rollouts, inconsistent device support, and shrinking proactive features create friction. For many, voice assistants remain useful but unreliable—more convenience than necessity.

The next phase depends on whether companies prioritize seamless, intelligent assistance over feature bloat and branding shifts. True progress won’t come from faster responses alone, but from assistants that understand not just what we say, but why we say it.
