Smartphone photography has evolved dramatically over the past decade, with one feature standing out as both revolutionary and controversial: simulated depth-of-field effects. Apple’s Portrait Mode and Samsung’s Live Focus are flagship tools designed to mimic professional DSLR-style background blur—bokeh—but questions remain. Are these features powered by genuine computational photography breakthroughs, or are they just clever filters masking hardware limitations?

The answer lies somewhere in between. While both systems use advanced algorithms and multiple cameras, their approaches differ significantly in execution, consistency, and realism. Understanding how each works—and where they succeed or fall short—can help users make informed decisions about which device delivers better portrait results.

How Portrait Mode and Live Focus Actually Work

At their core, both iPhone’s Portrait Mode and Samsung’s Live Focus rely on computational photography. Instead of using a large sensor and a wide-aperture lens like traditional cameras, smartphones estimate scene depth using dual (or triple) rear cameras and machine learning models, then simulate the shallow depth of field that such optics produce naturally.

Apple introduced Portrait Mode with the iPhone 7 Plus in 2016, leveraging its telephoto and wide-angle lenses to estimate depth. The system uses facial recognition, edge detection, and, on later models, neural networks running on the A-series chip’s Neural Engine to separate the subject from the background. Once the subject is segmented, it applies a synthetic blur that mimics optical defocus.
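To make the segment-then-blur idea concrete, here is a minimal Python sketch on a tiny grayscale image stored as nested lists. It assumes a subject mask has already been produced (the segmentation step itself is out of scope) and uses a simple box filter as a stand-in for whatever blur kernel Apple actually uses; `box_blur` and `portrait_composite` are hypothetical names, not Apple APIs.

```python
def box_blur(img, radius):
    """Blur a 2D grayscale image (list of lists) with a square box filter.

    Near the edges, only pixels that fall inside the image are averaged.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out


def portrait_composite(img, subject_mask, radius=2):
    """Keep subject pixels sharp and swap background pixels for blurred ones.

    subject_mask holds values in [0, 1]: 1 = subject, 0 = background;
    fractional values blend the two, giving a soft edge (e.g. around hair).
    """
    blurred = box_blur(img, radius)
    return [
        [m * s + (1 - m) * b for s, b, m in zip(row_s, row_b, row_m)]
        for row_s, row_b, row_m in zip(img, blurred, subject_mask)
    ]


image = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
mask = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # 1 marks the subject pixel
result = portrait_composite(image, mask, radius=1)
# result[1][1] stays 9 (subject kept sharp); result[0][0] becomes 2.25
```

Because the box filter also averages the bright subject pixel into neighboring background pixels, the corners pick up some brightness from the sharp center. That bleed is a simplified picture of how halo artifacts can appear around a subject when the blur and mask are not handled carefully.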

Samsung’s Live Focus, which debuted about a year later on the Galaxy Note8, takes a similar multi-camera approach, and some higher-end models add a ToF (Time-of-Flight) depth sensor. This sensor measures distance more directly than stereo disparity alone, improving depth-mapping precision. Samsung also shows the blur in a real-time preview and lets users adjust its intensity and style before capture.
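Why a ToF sensor can improve on stereo disparity follows from the underlying geometry. Below is a minimal Python sketch of both relations, assuming an idealized, rectified stereo pair and a direct (pulse-timing) ToF sensor; the 1500 px focal length and 12 mm baseline are illustrative values, not specifications of any Galaxy or iPhone model. Many phone ToF modules measure a phase shift rather than pulse timing, but the out-and-back halving is the same.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # metres per second


def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Stereo depth for an idealized rectified camera pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def tof_distance(round_trip_s):
    """Direct time-of-flight: light travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# Hypothetical numbers: 1500 px focal length, 12 mm between the two lenses.
near = depth_from_disparity(1500, 0.012, 9)     # about 2.0 m
farther = depth_from_disparity(1500, 0.012, 8)  # about 2.25 m: 1 px error shifts ~25 cm
tof = tof_distance(10e-9)                       # about 1.499 m for a 10 ns round trip
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs more and more accuracy as the subject moves away, which is why a direct distance measurement can tighten the depth map.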

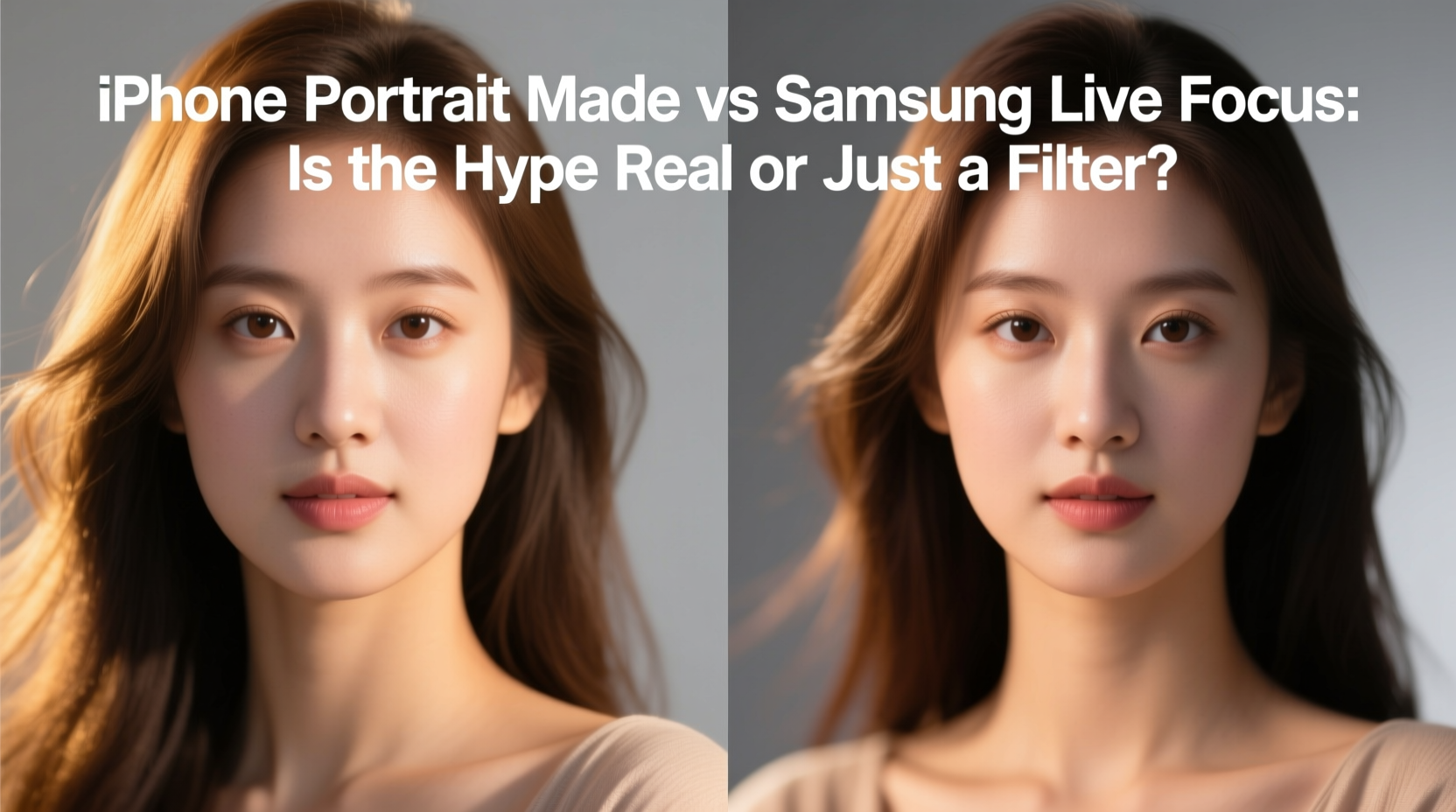

Image Quality and Realism: A Side-by-Side Analysis

The true test of these modes isn’t technical specs; it’s how photos look to the human eye. Real bokeh isn’t uniform: it varies with aperture shape, light sources, and focal plane curvature. Simulating this digitally is challenging.
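One reason real bokeh isn’t uniform is that the blur disc grows with each point’s distance from the plane of focus. The standard thin-lens circle-of-confusion relation captures this; the Python sketch below evaluates it for an illustrative 50 mm f/1.8 lens focused at 2 m (example values, not tied to either phone). A simulated blur that ignores this distance dependence is one plausible reason synthetic bokeh can look flat.

```python
def blur_circle_mm(focal_mm, f_number, focus_mm, point_mm):
    """Thin-lens blur-circle diameter on the sensor, in millimetres.

    c = A * |S2 - S1| / S2 * f / (S1 - f), with aperture diameter A = f / N,
    focus distance S1 and object distance S2 (all distances in mm).
    """
    aperture_mm = focal_mm / f_number
    return (aperture_mm * abs(point_mm - focus_mm) / point_mm
            * focal_mm / (focus_mm - focal_mm))


in_focus = blur_circle_mm(50, 1.8, 2000, 2000)  # 0.0: point lies on the focal plane
near_bg = blur_circle_mm(50, 1.8, 2000, 4000)   # background 2 m behind the subject
far_bg = blur_circle_mm(50, 1.8, 2000, 10000)   # background 8 m behind the subject
```

Here the blur disc grows from about 0.36 mm to about 0.57 mm as the background recedes, approaching a limit of A·f/(S1 − f), roughly 0.71 mm, so distant backgrounds blur heavily but not without bound.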

iPhones tend to produce smoother, more natural-looking blurs. Apple prioritizes accuracy in edge detection, especially around fine details like hair, eyelashes, and glasses. Recent models (iPhone 13 and later) use Photographic Styles and improved segmentation to preserve skin tones and textures while avoiding the “cut-out” look.

Samsung devices often apply a stronger, more dramatic blur. While visually striking, this can sometimes look artificial, particularly in low light or complex scenes. However, Samsung offers broader customization: users can adjust blur strength after capture and apply lighting and background effects (e.g., studio, spotlight, color tone), going beyond the Portrait Lighting presets available in iOS.
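Post-capture blur adjustment is possible when the phone keeps depth information with the photo; Apple’s Portrait photos, for example, carry embedded depth data, so the blur can be re-rendered later. The Python sketch below assumes a stored depth map in metres and a hypothetical 0-to-1 strength slider, and turns them into a per-pixel blur radius; `blur_radius_map` and `max_radius_px` are illustrative names, not Samsung or Apple APIs.

```python
def blur_radius_map(depth_m, focus_m, strength, max_radius_px=8.0):
    """Per-pixel blur radius (in pixels) from a saved depth map.

    Blur scales with relative distance from the focus depth, |Z - Z_focus| / Z,
    the same term that drives optical defocus, and then with the user's
    strength setting (0 = no blur, 1 = strongest). Values are capped.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return [
        [min(max_radius_px, strength * max_radius_px * abs(z - focus_m) / z)
         for z in row]
        for row in depth_m
    ]


depth = [[2.0, 4.0], [0.5, 10.0]]  # subject at 2 m; other pixels nearer or farther
strong = blur_radius_map(depth, focus_m=2.0, strength=1.0)
subtle = blur_radius_map(depth, focus_m=2.0, strength=0.25)
```

Feeding these radii into a variable-radius blur would re-render the photo at the new strength without reshooting; the subject’s own pixels get a radius of zero at every setting.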

“Computational bokeh has reached a point where casual viewers can’t distinguish it from optical blur—when conditions are ideal. But under stress, the seams show.” — Dr. Lena Park, Computational Imaging Researcher at MIT Media Lab

Comparison Table: Key Features and Capabilities

| Feature | iPhone Portrait Mode | Samsung Live Focus |
|---|---|---|
| Real-Time Preview | Yes (live preview; Depth Control before capture on newer models) | Yes (adjustable pre-shot) |
| Post-Capture Blur Adjustment | Yes (on supported models) | Yes (with intensity and style options) |
| Edge Detection Accuracy | Excellent (especially on faces/hair) | Good, struggles with fine strands |
| Supported Subjects | People, pets, objects (iOS 16+) | Mainly people, limited pet support |
| Low-Light Performance | Strong (Night mode integration on Pro models) | Variable (depends on model/sensor) |
| Customization Options | Limited (Portrait Lighting presets, natural focus priority) | Extensive (lighting styles, blur levels) |

A Real-World Example: Wedding Guest Photography

Consider Sarah, a guest at a friend’s outdoor wedding. She wants to capture heartfelt portraits without carrying extra gear. Using her iPhone 15 Pro, she switches to Portrait Mode. The sunlight creates soft shadows, and the Neural Engine quickly locks focus on the bride’s face. Even with wind-blown hair, the background trees blur smoothly while detail in the bride’s dress and eyes is preserved.

Meanwhile, Mark, using a Galaxy S24 Ultra, activates Live Focus with a “Studio Light” effect. He likes being able to tweak the blur intensity before shooting. However, when photographing the groom adjusting his tie near a patterned wall, the algorithm misreads part of the fabric as background, creating a halo-like artifact around his shoulder.

In this scenario, Apple’s conservative, hardware-integrated approach yields more reliable results, while Samsung’s creative freedom comes with occasional processing errors. Neither failed entirely—but consistency favored the iPhone.

Common Pitfalls and How to Avoid Them

Even advanced systems struggle under certain conditions. Movement, low contrast, or cluttered backgrounds can confuse depth sensors. Here’s how to maximize success:

- Stay within optimal range (1–2 feet for close-ups, up to 6 feet for full-body).

- Avoid hats and glasses with reflective lenses where possible.

- Use even lighting; gentle backlighting can help separate the subject from the background.

- Don’t shoot through fences, glass, or transparent objects.

- Hold steady for 1–2 seconds after capture to allow full processing.
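Low contrast confuses stereo depth estimation because depth comes from matching patches between two views, and on a flat, textureless surface many shifts match equally well. The textbook block-matching sketch below (sum of absolute differences along one image row, in Python) shows the effect. It illustrates the general technique only, not how Apple or Samsung compute depth, since both also rely on machine learning and, on some models, dedicated depth sensors; the function names and pixel values are hypothetical.

```python
def match_costs(left, right, x, window, max_disp):
    """Sum-of-absolute-differences cost of each candidate disparity at pixel x.

    A point at column x in the left row is assumed to appear at column x - d
    in the right row. Shifts that run off the edge get an infinite cost.
    """
    costs = []
    for d in range(max_disp + 1):
        cost = 0.0
        for k in range(-window, window + 1):
            xl, xr = x + k, x + k - d
            if not (0 <= xl < len(left) and 0 <= xr < len(right)):
                cost = float("inf")
                break
            cost += abs(left[xl] - right[xr])
        costs.append(cost)
    return costs


def best_disparities(costs):
    """Every disparity tied for the lowest cost; more than one means ambiguity."""
    lowest = min(costs)
    return [d for d, c in enumerate(costs) if c == lowest]


textured = [10, 50, 20, 80, 30, 60, 40, 90, 15, 70]
shifted = textured[2:] + [0, 0]  # same row seen by the other camera, shifted 2 px
flat = [50] * 10                 # a plain, low-contrast wall

textured_match = best_disparities(match_costs(textured, shifted, 5, 1, 3))  # [2]
flat_match = best_disparities(match_costs(flat, flat, 5, 1, 3))  # [0, 1, 2, 3]
```

In the flat case every shift ties, so the matcher has no basis for choosing a depth. Plain walls, blown-out skies, and dark, noisy scenes behave similarly, which is where the tips above help.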

Step-by-Step Guide to Capturing Better Portrait Shots

- Switch to Portrait Mode – Open the Camera app and select Portrait (iPhone) or Live Focus (Samsung; labeled Portrait on newer Galaxy models).

- Check Lighting – Position subject so light falls from the side or front; avoid harsh overhead sun.

- Maintain Distance – Keep subject at least 8 inches from background and within 8 feet of the camera.

- Frame the Shot – Center the subject briefly so the phone can lock focus and build the depth map.

- Capture with Stability – Hold still during and immediately after pressing the shutter.

- Edit Afterward – Adjust the blur level (if supported) and exposure in the gallery editor.

Frequently Asked Questions

Is Portrait Mode just a blur filter?

No. While the final effect resembles a simple blur, it’s based on actual depth mapping using multiple cameras and AI segmentation. It selectively blurs only the background, preserving sharpness on the subject—a far cry from applying a global filter.

Can Live Focus work on non-human subjects?

To a limited extent. Samsung primarily optimizes Live Focus for human faces. Some newer models recognize pets, but object recognition lags behind Apple’s implementation, which supports flowers, toys, and everyday items in iOS 16 and later.

Why does my iPhone say “Lighting Not Available” sometimes?

This occurs when ambient light is too low or uneven for accurate depth estimation. Ensure adequate illumination, or use Night mode portraits if your model supports them (iPhone 12 Pro and later Pro models).

Final Verdict: Hype or Legit?

The hype around Portrait Mode and Live Focus is justified, but with caveats. These aren’t magic filters slapped onto flat images. They represent years of progress in AI, sensor fusion, and on-device processing. When conditions align, both systems can produce results that casual viewers would struggle to tell apart from shots taken with entry-level mirrorless cameras.

That said, calling them “equal to professional gear” oversells reality. They excel in controlled environments but falter with motion, transparency, or extreme lighting. The iPhone leans toward reliability and naturalism; Samsung favors creativity and user control. Your preference depends on whether you value consistency or customization.

