Replication is a cornerstone of modern data systems, enabling consistency, availability, and fault tolerance across distributed environments. Whether in databases, cloud storage, or scientific research, replication ensures that data or processes are duplicated to maintain integrity and performance. Yet, despite its reliability goals, replication is not immune to failure. Replication errors—instances where copied data diverges, fails to synchronize, or becomes inconsistent—can compromise system integrity, lead to data loss, or disrupt operations. Understanding where these errors occur, how they manifest, and why they happen is essential for maintaining robust systems.

Where Replication Errors Occur

Replication takes place across multiple domains, each with unique challenges. The most common environments prone to replication errors include:

- Distributed Databases: Systems like MySQL, PostgreSQL, MongoDB, and Cassandra replicate data across nodes. Network partitions, latency, or node failures can interrupt synchronization.

- Cloud Storage Services: Platforms such as AWS S3, Google Cloud Storage, and Azure Blob Storage use geo-replication. Errors may arise from misconfigured policies or throttling.

- Version Control Systems: Tools like Git rely on replication between local and remote repositories. Conflicts emerge during merges or when branches diverge.

- Scientific Experiments: In research, replication refers to repeating studies to validate results. Human error, flawed methodology, or publication bias can invalidate outcomes.

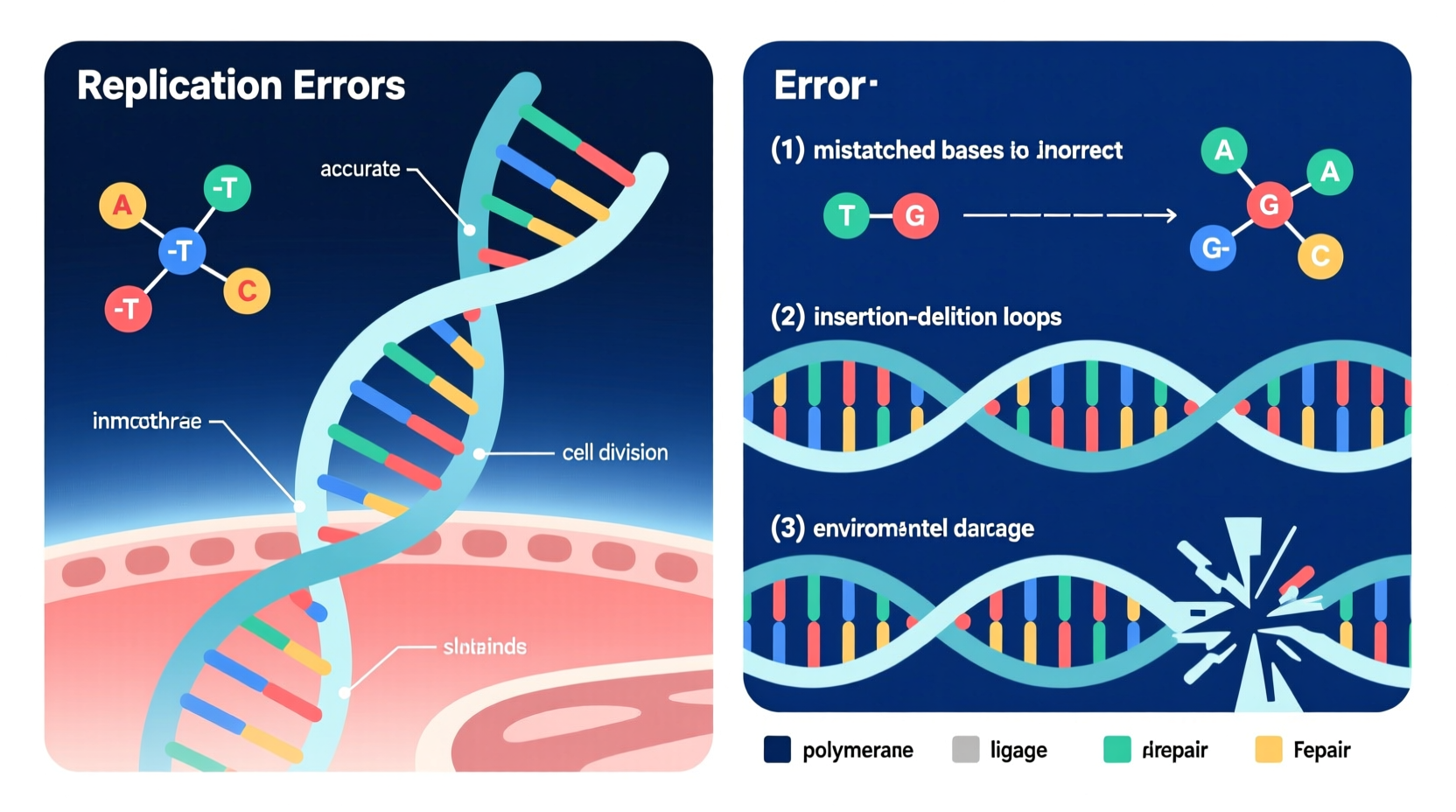

- Biological Systems: DNA replication in cells is a natural process; mutations caused by enzyme errors or environmental stressors can lead to genetic disorders.

Each context introduces different points of failure, but the underlying theme remains: any system relying on duplication is vulnerable when synchronization breaks down.

How Replication Errors Manifest

Replication errors don’t always appear as outright system crashes. More often, they present subtly, making them harder to diagnose. Common symptoms include:

- Data Inconsistency: Records differ between primary and replica databases.

- Replication Lag: Delay in applying changes from the source to the target.

- Failed Transactions: Write operations succeed on the master but fail on replicas.

- Split-Brain Scenarios: Two nodes believe they are the primary, leading to conflicting updates.

- Checksum Mismatches: Binary logs or file hashes don’t align between systems.

In software development, a developer might push code to a remote repository only to find merge conflicts upon pull—this is a form of replication error at the version control level. In databases, an e-commerce site might display outdated inventory because the read replica hasn't synced with the latest order data.
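Data inconsistency and checksum mismatches can be detected by hashing rows on both sides and comparing the digests. The sketch below is a minimal illustration, assuming the rows have already been fetched into dictionaries keyed by primary key; the function names `row_checksum` and `find_divergent_keys` and the sample data are hypothetical, not part of any database tool.

```python
import hashlib


def row_checksum(row):
    """Return a stable SHA-256 digest for one row (a tuple of column values)."""
    # repr() keeps types distinct (5 vs "5"); \x1f separates the columns.
    encoded = "\x1f".join(repr(value) for value in row).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def find_divergent_keys(primary_rows, replica_rows):
    """Return the keys whose rows differ, or exist on only one side."""
    divergent = []
    for key in sorted(set(primary_rows) | set(replica_rows)):
        primary_row = primary_rows.get(key)
        replica_row = replica_rows.get(key)
        if primary_row is None or replica_row is None:
            divergent.append(key)  # row missing on one side
        elif row_checksum(primary_row) != row_checksum(replica_row):
            divergent.append(key)  # row present on both sides but different
    return divergent


# Hypothetical inventory rows: (product name, units in stock)
primary = {1: ("widget", 5), 2: ("gadget", 0), 3: ("gizmo", 12)}
replica = {1: ("widget", 5), 2: ("gadget", 3)}

print(find_divergent_keys(primary, replica))  # [2, 3]
```

On large tables, comparing per-chunk digests rather than per-row digests would likely be cheaper; the per-row version is used here only because it is easier to follow.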

Why Replication Errors Happen: Root Causes

The causes of replication errors are multifaceted, often stemming from technical, environmental, or human factors. Below are the primary drivers:

- Network Instability: Packet loss, high latency, or temporary outages prevent timely data transfer.

- Configuration Drift: Replica servers may run different software versions or have mismatched settings.

- Hardware Failures: Disk corruption or memory faults on a replica node can halt replication threads.

- Write Conflicts: In multi-master setups, simultaneous writes to the same record cause race conditions.

- Binary Log Corruption: MySQL and similar systems rely on binary logs; if these become corrupted, replication halts.

- Human Error: Accidental deletion of replication slots, incorrect SQL statements, or manual intervention without safeguards.

- Resource Exhaustion: High CPU, memory pressure, or disk I/O bottlenecks slow or stall replication.

“Over 60% of database outages involving replication stem from configuration mismatches or network-related delays.” — Dr. Lena Torres, Senior Database Architect at CloudScale Inc.

Step-by-Step Guide to Diagnose and Resolve Replication Errors

When replication fails, a structured approach minimizes downtime and prevents data divergence. Follow these steps in order:

- Verify Replication Status: Use commands like `SHOW SLAVE STATUS\G` (MySQL) or `rs.status()` (MongoDB) to check error codes and lag.

- Review Logs: Examine error logs on both master and replica for specific failure messages (e.g., “Could not connect to master” or “Duplicate entry”).

- Assess Network Connectivity: Test latency, packet loss, and port reachability between nodes using `ping`, `traceroute`, or `telnet`.

- Check Configuration: Confirm that server IDs are unique and that binlog formats and replication filters are consistent across nodes.

- Restart Replication Thread: If safe, stop and restart the slave thread after resolving the root cause.

- Synchronize Data: If divergence is significant, reseed the replica using backups or logical dumps.

- Implement Monitoring: Set up alerts for replication lag, thread status, and checksum mismatches.
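The first step above, verifying replication status, can be automated once the status output is available as a dictionary. The following is a rough sketch, assuming a MySQL-style status row has already been fetched by some client and converted to a `dict`; the function name `evaluate_replica_status` and the 30-second default threshold are illustrative assumptions, while the keys are the field names reported by `SHOW SLAVE STATUS`.

```python
def evaluate_replica_status(status, max_lag_seconds=30):
    """Return a list of human-readable problems found in a replica status row.

    `status` is assumed to be a dict built from MySQL's SHOW SLAVE STATUS
    output. An empty list means no problem was detected by these checks.
    """
    problems = []

    if status.get("Slave_IO_Running") != "Yes":
        problems.append("IO thread is not running")
    if status.get("Slave_SQL_Running") != "Yes":
        problems.append("SQL thread is not running")

    lag = status.get("Seconds_Behind_Master")
    if lag is None:
        # MySQL reports NULL here when lag cannot be determined.
        problems.append("replication lag is unknown")
    elif int(lag) > max_lag_seconds:
        problems.append(f"lag of {int(lag)}s exceeds {max_lag_seconds}s")

    if status.get("Last_Error"):
        problems.append(f"last error: {status['Last_Error']}")

    return problems


# Hypothetical status row for a replica whose SQL thread stopped on an error.
sample = {
    "Slave_IO_Running": "Yes",
    "Slave_SQL_Running": "No",
    "Seconds_Behind_Master": None,
    "Last_Error": "Duplicate entry",
}
for problem in evaluate_replica_status(sample):
    print(problem)
```

The returned list could feed the alerting mentioned in the final step; how the status row is fetched depends on the client library in use and is left out here.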

Prevention Checklist: Minimizing Future Replication Errors

Proactive maintenance reduces the likelihood of replication breakdowns. Use this checklist regularly:

- ✅ Enable automated monitoring for replication lag and thread health

- ✅ Enforce consistent configurations across all nodes

- ✅ Use checksums or hash verification for critical data transfers

- ✅ Implement heartbeat mechanisms between master and replicas

- ✅ Schedule regular failover drills to test recovery procedures

- ✅ Limit direct writes to replicas unless in multi-master mode

- ✅ Maintain up-to-date documentation of replication topology
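One way to picture the heartbeat item in this checklist: the primary periodically writes a timestamp, the timestamp replicates like any other data, and lag is estimated as the difference between the current time and the last timestamp each replica has applied. The sketch below is a simplified in-memory model of that idea; the `HeartbeatMonitor` class and its method names are hypothetical, and it assumes the clocks on all nodes are reasonably synchronized.

```python
import time


class HeartbeatMonitor:
    """Track the latest primary heartbeat timestamp seen on each replica."""

    def __init__(self, clock=time.time):
        self._clock = clock      # injectable so the monitor can be tested
        self._last_seen = {}     # replica name -> heartbeat timestamp

    def record(self, replica, heartbeat_timestamp):
        """Store the newest heartbeat value read from a replica."""
        self._last_seen[replica] = heartbeat_timestamp

    def lag_seconds(self, replica):
        """Return estimated lag, or None if no heartbeat was ever seen."""
        timestamp = self._last_seen.get(replica)
        if timestamp is None:
            return None
        return max(0.0, self._clock() - timestamp)

    def stale_replicas(self, threshold_seconds):
        """Return sorted names of replicas whose lag exceeds the threshold."""
        return sorted(
            name for name in self._last_seen
            if self.lag_seconds(name) > threshold_seconds
        )


# Usage with a fixed clock so the numbers are easy to follow.
monitor = HeartbeatMonitor(clock=lambda: 1000.0)
monitor.record("replica-a", 999.0)   # applied a heartbeat written 1s ago
monitor.record("replica-b", 700.0)   # applied a heartbeat written 300s ago
print(monitor.stale_replicas(threshold_seconds=10))  # ['replica-b']
```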

Real-World Example: E-Commerce Outage Due to Replication Lag

A mid-sized online retailer experienced a critical outage during a flash sale. Customers reported seeing items as “in stock” on product pages, only to encounter “out of stock” errors at checkout. Investigation revealed that the read replicas used for frontend queries were lagging behind the primary database by over five minutes due to excessive load. While orders were being processed and inventory reduced on the master, replicas continued serving stale data.

The team resolved the issue by temporarily routing all read traffic to the master and scaling up replica resources. Post-incident, they implemented query throttling, added auto-scaling for replicas, and introduced real-time lag monitoring with Slack alerts. This case underscores how replication errors, even when subtle, can directly impact user experience and revenue.
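The temporary fix described here, sending reads to the master when replicas fall too far behind, can also be expressed as a routing rule. The following is a minimal sketch of such a rule, not the retailer's actual implementation; the function name `choose_read_target`, the replica names, and the 5-second threshold are assumptions for illustration.

```python
def choose_read_target(replica_lags, max_lag_seconds=5.0):
    """Pick where to send a read query.

    `replica_lags` maps replica name -> lag in seconds (None if unknown).
    Returns the least-lagged replica within the threshold, or "primary"
    when every replica is too stale to serve fresh data.
    """
    fresh = {
        name: lag
        for name, lag in replica_lags.items()
        if lag is not None and lag <= max_lag_seconds
    }
    if not fresh:
        return "primary"
    return min(fresh, key=fresh.get)


# During the flash sale: both replicas are more than five minutes behind.
print(choose_read_target({"replica-1": 320.0, "replica-2": 310.0}))  # primary

# Normal operation: the least-lagged replica serves the read.
print(choose_read_target({"replica-1": 0.4, "replica-2": 2.0}))  # replica-1
```

Falling back to the primary protects data freshness at the cost of extra load on it, so a rule like this would usually be paired with the throttling and scaling measures the team adopted.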

Do’s and Don’ts of Managing Replication

| Do | Don’t |
|---|---|
| Use row-based replication for better accuracy in complex transactions | Assume replication is real-time; always account for potential lag |
| Regularly verify data consistency with checksum tools | Apply untested schema changes on the master without trying them in staging first |
| Document your replication architecture and failover process | Ignore replication warnings; treat them as critical alerts |
| Test disaster recovery scenarios quarterly | Run long-running transactions on the master during peak hours |

Frequently Asked Questions

What is the difference between synchronous and asynchronous replication?

Synchronous replication ensures that data is written to both the primary and replica before confirming success, guaranteeing consistency but increasing latency. Asynchronous replication confirms the write on the primary immediately and replicates later, offering better performance but risking data loss if the primary fails before sync.
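The trade-off can be illustrated with a toy in-memory model. This is a simplified sketch under stated assumptions (one primary, one replica, no network or disk), and the `ReplicatedStore` class is hypothetical; it only shows why an acknowledged write can be lost in asynchronous mode.

```python
class ReplicatedStore:
    """Toy model of one primary and one replica held in memory."""

    def __init__(self, synchronous):
        self.synchronous = synchronous
        self.primary = {}
        self.replica = {}
        self._pending = []  # writes acknowledged but not yet shipped

    def write(self, key, value):
        """Apply a write and acknowledge it to the caller."""
        self.primary[key] = value
        if self.synchronous:
            # Synchronous: the replica applies the write before the ack.
            self.replica[key] = value
        else:
            # Asynchronous: ack now, ship to the replica later.
            self._pending.append((key, value))
        return "ack"

    def ship_pending(self):
        """Deliver queued writes to the replica (asynchronous mode)."""
        for key, value in self._pending:
            self.replica[key] = value
        self._pending.clear()

    def fail_primary(self):
        """Simulate losing the primary; return what the replica still has."""
        self.primary.clear()
        self._pending.clear()  # unshipped writes vanish with the primary
        return dict(self.replica)


sync_store = ReplicatedStore(synchronous=True)
sync_store.write("order-1", "paid")
print(sync_store.fail_primary())   # {'order-1': 'paid'}

async_store = ReplicatedStore(synchronous=False)
async_store.write("order-1", "paid")
print(async_store.fail_primary())  # {}
```

In the asynchronous case the write was acknowledged to the caller yet never reached the replica, which is the data-loss window described above.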

Can replication errors cause data loss?

Yes. If a replica fails and isn’t restored from a recent backup, or if binary logs are purged prematurely, data may be irrecoverable. Misconfigured replication can also lead to unintended overwrites or truncation.

How do I know if my replication is healthy?

Monitor key metrics: replication lag (should be near zero), thread running status (in MySQL, both the IO and SQL threads should report “Yes”), and the absence of error messages in logs. Automated tools like Prometheus with exporters or native cloud monitoring dashboards help maintain visibility.

Conclusion: Building Resilience Against Replication Errors

Replication errors are not a matter of if, but when. Systems will face network hiccups, hardware wear, or human oversight. The key lies in preparation, vigilance, and rapid response. By understanding where replication occurs, recognizing early signs of failure, and implementing robust monitoring and recovery practices, organizations can maintain data integrity and service continuity.
