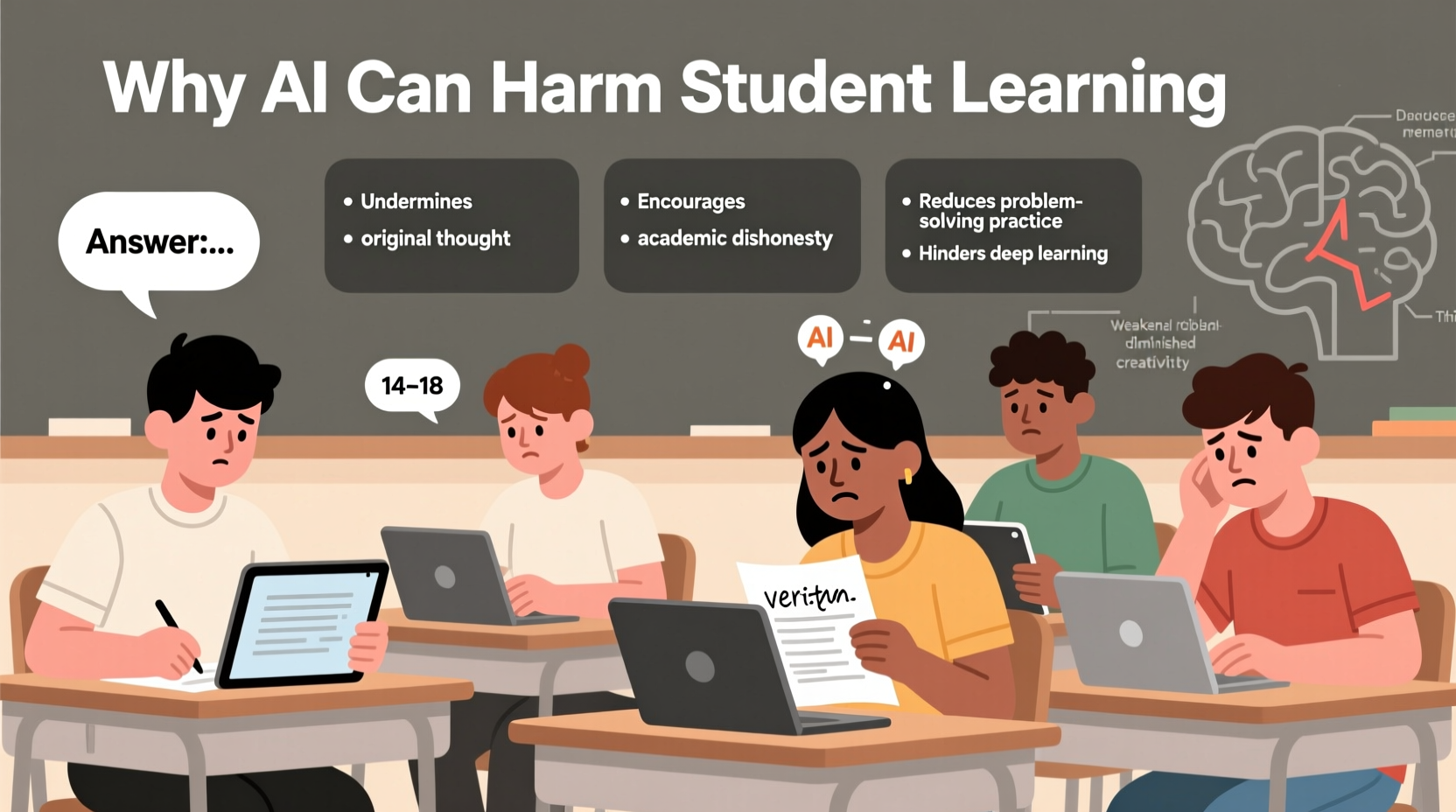

Artificial intelligence has rapidly integrated into classrooms, homework platforms, and study tools. While AI offers undeniable benefits—such as personalized tutoring, instant feedback, and accessibility—it also carries significant risks when used without caution. For students, especially those still developing cognitive and ethical frameworks, unchecked reliance on AI can undermine core educational goals. From weakening critical thinking to enabling academic dishonesty, the unintended consequences are growing more evident. This article examines the key downsides of AI in student learning, offering insights and practical guidance for responsible use.

Erosion of Critical Thinking and Problem-Solving Skills

One of the most concerning impacts of AI on students is its tendency to shortcut deep cognitive engagement. When learners rely on AI to generate essays, solve math problems, or summarize readings, they bypass the mental effort required to analyze, synthesize, and evaluate information. Over time, this passive consumption weakens their ability to think independently.

For example, a student using an AI tool to write a history essay may receive a well-structured response but miss the opportunity to form original arguments, weigh evidence, or confront conflicting interpretations. The process of struggling with ideas—frustrating as it may be—is essential for intellectual growth.

“Learning isn’t just about getting the right answer. It’s about understanding how to get there.” — Dr. Linda Chen, Cognitive Psychologist and Education Researcher

Academic Integrity and the Rise of Undetectable Cheating

AI-powered writing tools can produce human-like text that evades traditional plagiarism detectors. This capability makes it easier than ever for students to submit work that isn’t their own—sometimes without even realizing it crosses ethical lines.

Institutions are struggling to keep up. A 2023 survey by the International Center for Academic Integrity found that over 58% of high school and college students admitted using AI to complete assignments, with nearly one-third unsure whether doing so constituted cheating.

The problem extends beyond dishonesty. When students outsource their thinking, they lose the chance to build confidence in their abilities. They may pass courses, but they arrive at exams or real-world challenges unprepared.

Common Ethical Gray Areas in AI Use

| Situation | Risk Level | Why It’s Problematic |
|---|---|---|
| Using AI to rewrite your draft | Low-Moderate | Acceptable if original ideas are yours; risky if over-edited |
| Generating full essays from prompts | High | Undermines authorship and learning process |
| Letting AI solve homework problems | High | Prevents mastery of problem-solving techniques |
| Using AI to study or quiz yourself | Low | Constructive when reinforcing self-learned material |

Overdependence and Diminished Self-Efficacy

When students grow accustomed to AI providing instant answers, they begin to doubt their own capabilities. The habit of handing mental work to an external tool, known as “cognitive offloading,” also reduces motivation to persist through difficult tasks. Why struggle with a calculus problem for 20 minutes when an app solves it in seconds?

But struggle is where learning happens. Educational psychologist Albert Bandura emphasized the importance of self-efficacy—the belief in one’s ability to succeed. Students who rely too heavily on AI may develop learned helplessness, expecting external tools to rescue them rather than trusting their own judgment.

Mini Case Study: The Overconfident Student

Jamal, a sophomore in college, used an AI assistant to complete his weekly biology assignments. He consistently received high grades and assumed he understood the material. When midterm exams arrived—no AI allowed—he scored poorly for the first time. His professor noted: “You’re relying on tools to carry you, but exams test your mind, not your apps.” Jamal had to re-enroll in a remedial course, losing both time and confidence.

Data Privacy and Surveillance Concerns

Many AI-powered educational platforms collect extensive data on student behavior: typing patterns, response times, search history, and even emotional cues inferred from language. While companies claim this data improves personalization, it raises serious privacy issues.

Students often don’t understand what they’re agreeing to when they sign into AI tutoring systems. Their digital footprints can be stored indefinitely, shared with third parties, or used to profile learning behaviors in ways that may affect future opportunities.

- Some AI platforms track keystroke dynamics to detect “cheating” during exams.

- Others use predictive analytics to flag students as “at-risk,” potentially influencing teacher perceptions unfairly.

- Free tools may monetize user data through targeted advertising or research partnerships.

Lack of Accountability and Misinformation Risks

AI models are not infallible. They can generate confident-sounding but incorrect or misleading information—a phenomenon known as “hallucination.” Students who accept AI outputs without verification risk internalizing false facts.

For instance, an AI might confidently state that Napoleon Bonaparte led troops in World War I or that photosynthesis occurs in animal cells. Without fact-checking skills, students may repeat these errors in papers or discussions, damaging their credibility.

Moreover, because AI lacks moral reasoning, it may not warn students about biased, offensive, or inappropriate content it generates. A student researching sensitive topics could unknowingly produce work containing harmful stereotypes or ethically questionable arguments.

Expert Insight on AI Limitations

“AI doesn’t understand truth—it predicts plausible sequences of words. That’s a dangerous gap for learners who assume authority in every output.” — Dr. Rajiv Mehta, Computer Science and Ethics Professor at MIT

Practical Checklist for Responsible AI Use

To harness AI’s benefits while minimizing harm, students should follow clear guidelines. Here’s a checklist to promote ethical and effective use:

- Use AI for idea generation, not final content creation.

- Cite AI assistance when required by your institution.

- Always verify AI-generated facts with credible sources.

- Limit AI help on foundational assignments to ensure skill development.

- Avoid submitting AI-written work as your own unless explicitly permitted.

- Review privacy settings and avoid sharing sensitive personal information.

- Discuss AI use with teachers to understand classroom policies.

Frequently Asked Questions

Is using AI to write my essay considered cheating?

It depends on your school’s policy. If you submit AI-generated text as your own original work without disclosure, it typically violates academic integrity rules. Always check with your instructor and cite AI use when appropriate.

Can teachers tell if I used AI?

Not always. While some detection tools exist, they are unreliable and prone to false positives. However, inconsistencies in writing style, lack of depth, or sudden improvements in quality may raise suspicion. The best approach is honesty and transparency.

How can I use AI without harming my learning?

Use AI as a supplement, not a substitute. Ask it to explain difficult concepts, generate practice questions, or provide feedback on your drafts—but only after you’ve attempted the work yourself.

Conclusion: Balancing Innovation with Responsibility

Artificial intelligence is reshaping education, but its integration must be thoughtful and guided by pedagogical principles. For students, the convenience of AI comes with hidden costs: weakened thinking skills, ethical dilemmas, and long-term dependency. The goal of education isn’t just to produce correct answers, but to cultivate capable, independent minds.

By setting boundaries, verifying outputs, and prioritizing active learning, students can use AI as a tool—not a crutch. Educators, parents, and institutions must also play a role in establishing clear guidelines and fostering digital literacy. The future of learning depends not on how smart the machines are, but on how wisely we choose to use them.
