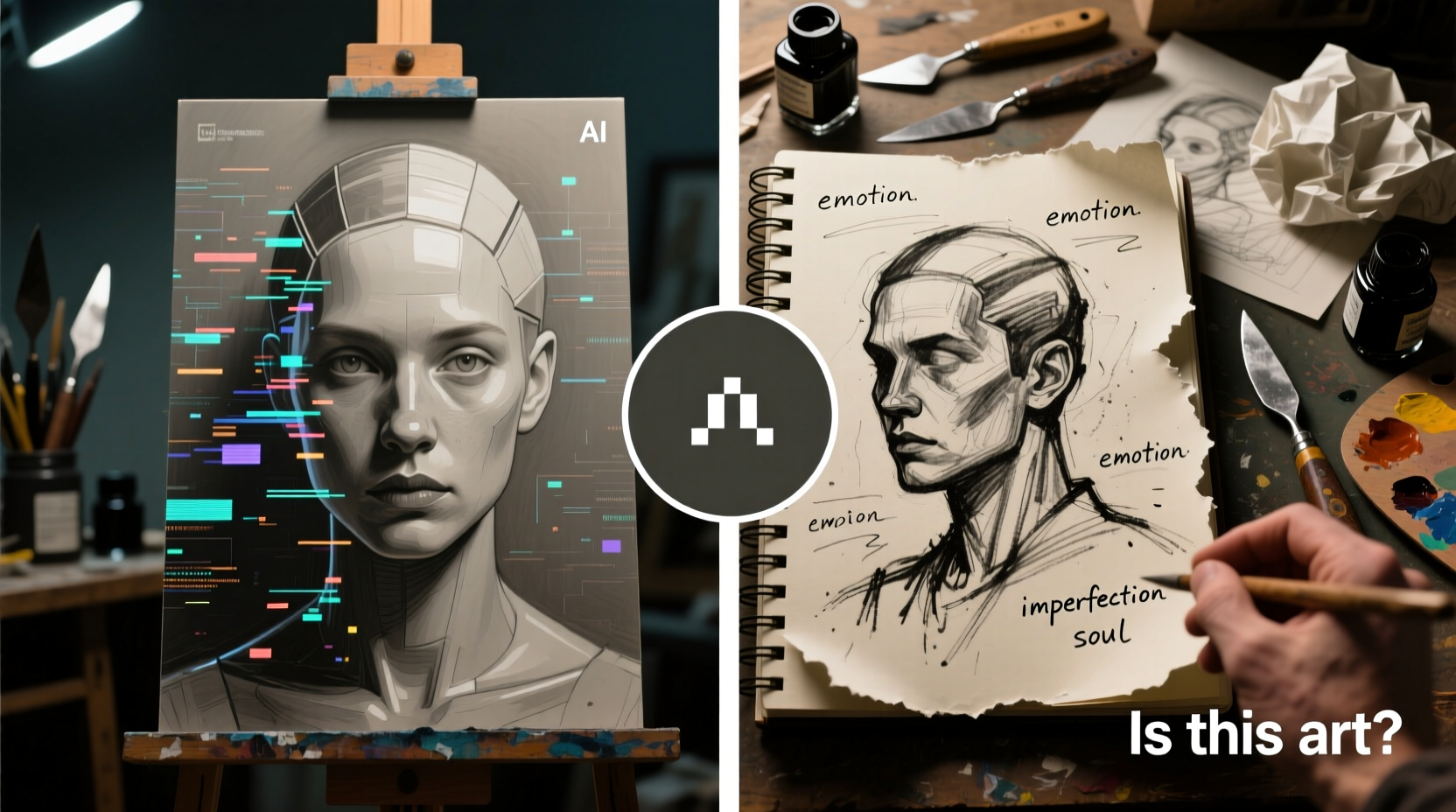

The rise of artificial intelligence in creative fields has sparked both excitement and controversy. While AI-generated art can produce visually stunning images with minimal input, it has also drawn sharp criticism from artists, ethicists, and industry professionals. The question “Why is AI art considered bad?” isn’t about dismissing technological progress—it’s about understanding the real implications behind how these tools are developed, trained, and used. From copyright violations to devaluing human creativity, the concerns are complex and deeply rooted in ethics, labor, and artistic integrity.

Ethical Issues: Training Data and Consent

One of the most pressing criticisms of AI art generators like Midjourney, Stable Diffusion, and DALL-E is their reliance on vast datasets of existing artwork, much of which was scraped from the internet without permission. These models learn by analyzing millions of images, including works by living artists whose styles can be replicated with a simple text prompt.

This raises serious ethical questions. Did the original creators consent to having their work used for training? Were they compensated? In most cases, the answer is no. Artists report discovering that AI tools can generate pieces nearly identical to their signature styles—sometimes even mimicking watermarks or unique brushwork—without any credit or financial benefit to them.

“We didn’t opt in. Our life’s work is being used as fuel for systems that could eventually replace us.” — Sarah Chen, digital illustrator and advocate for artist rights

The lack of transparency in data sourcing makes it nearly impossible for artists to protect their intellectual property. Even when platforms claim to allow artists to “opt out” of training datasets, the process is often unclear, ineffective, or implemented only after public backlash.

Devaluation of Human Artistry

AI art challenges long-standing notions of what it means to create. Traditional art requires years of skill development, emotional investment, and personal expression. In contrast, AI can generate polished images in seconds based on prompts like “cyberpunk cat in neon rain, oil painting style.”

While this accessibility democratizes image creation for some, it risks diminishing the perceived value of human-made art. Clients may begin to favor quick, low-cost AI outputs over commissioning original work, especially when budgets are tight. This shift threatens livelihoods, particularly for freelance illustrators, concept artists, and emerging creatives trying to break into competitive industries.

Moreover, AI lacks intent. It cannot experience inspiration, struggle, or cultural context. When a human artist paints a war scene, it may stem from historical awareness, personal trauma, or political commentary. An AI generates similar imagery based on statistical patterns—not meaning. Critics argue that mistaking technical proficiency for artistic depth leads to a hollow creative culture.

Copyright and Legal Ambiguity

The legal landscape surrounding AI-generated art remains murky. Who owns the rights to an image created by AI? Is it the user who wrote the prompt, the developer of the model, or the original artists whose work influenced the output?

In the U.S., the Copyright Office has taken the position that AI-generated images lacking human authorship cannot be copyrighted. However, if a human contributes sufficient creative input, for example by significantly modifying or arranging the output, those human-authored elements may be protected. This creates uncertainty for both creators and businesses relying on AI art for commercial use.

| Aspect | Human-Created Art | AI-Generated Art |
|---|---|---|
| Copyright Eligibility | Fully eligible | Limited or none (without human modification) |
| Source of Inspiration | Personal experience, culture, emotion | Data patterns from existing artworks |
| Creator Compensation | Direct (sales, commissions) | Indirect (platform subscriptions, prompt engineering) |
| Ethical Sourcing | Controlled by artist | Often unverified or non-consensual |

This legal gray area complicates everything from licensing to infringement claims. For example, artistic style on its own is generally not protected by copyright, so if an AI replicates an artist's distinctive style, it is unclear whether the artist can pursue damages, even in jurisdictions that recognize moral rights. Courts are still grappling with these questions.

Impact on Creative Industries and Jobs

The integration of AI into design workflows has already begun reshaping hiring practices. Some companies now request AI-assisted mockups before commissioning final artwork, reducing the number of paid projects available to human artists. Others use AI to generate stock images, bypassing traditional agencies and photographers altogether.

A mini case study illustrates the concern: A small animation studio in Portland experimented with AI tools to speed up background design. Initially, productivity increased. But within months, two junior concept artists were laid off, and freelance rates dropped as clients expected faster turnarounds at lower costs. The studio’s lead designer noted, “We saved time, but we lost nuance. And we hurt people in the process.”

This pattern echoes broader fears about automation across creative sectors. While AI can assist in ideation or repetitive tasks, its unchecked adoption risks normalizing underpayment, overproduction, and creative homogenization.

Checklist: Ethical Use of AI Art Tools

- Verify whether your AI tool allows artists to opt out of training data

- Avoid generating work in the style of living artists without permission

- Credit human contributors when combining AI with manual editing

- Support platforms that compensate artists or share revenue fairly

- Use AI as a supplement—not a replacement—for original creation

Expert Perspectives and Ongoing Debate

The conversation around AI art isn’t monolithic. Some technologists view it as an inevitable evolution, comparing early resistance to photography or digital painting. Others see it as a threat to artistic sovereignty.

“AI doesn’t replace artists—it replaces tasks. The danger lies in how society chooses to deploy it.” — Dr. Marcus Liu, researcher in AI ethics at MIT

Indeed, AI can be a powerful assistant: helping overcome creative blocks, generating mood boards, or prototyping ideas. The issue arises when it’s used to replicate, commodify, or undercut human creators without accountability.

Organizations like the Authors Guild and the Visual Artists’ Coalition have called for stronger regulations, including mandatory disclosure of AI usage in published works and legal recognition of style protection. Meanwhile, open-source initiatives are exploring ethical training datasets that compensate contributors—a step toward more responsible development.

FAQ

Can I sell AI-generated art legally?

Yes, in most jurisdictions, you can sell AI-generated images. However, you typically cannot claim full copyright unless significant human creative input is involved. Always check platform terms and local laws.

Does using AI art harm real artists?

It depends on how it’s used. When AI replicates styles or replaces paid commissions without compensation to original creators, it contributes to economic and ethical harm. Responsible use respects boundaries and supports—not displaces—human artists.

Are there ethical AI art tools available?

A few emerging platforms, such as those using licensed datasets or offering artist revenue shares, aim to operate more ethically. Examples include Adobe Firefly (which uses content from Adobe Stock) and community-driven models that prioritize consent.

Conclusion: Toward a Balanced Creative Future

The backlash against AI art isn’t a rejection of innovation—it’s a call for responsibility. Technology should empower creators, not exploit them. As AI becomes more embedded in creative workflows, the standards we set today will shape the future of art, ownership, and cultural value.

Artists, developers, and consumers all have a role to play. Demand transparency in training data. Support policies that protect creative labor. And when using AI tools, ask not just “Can I?” but “Should I?”
