Neural Network Feedforward

About neural network feedforward

Where to Source Neural Network Feedforward Technology Suppliers?

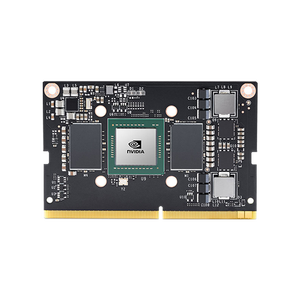

The global supply base for neural network feedforward technologies is primarily concentrated in advanced technology hubs within East Asia, North America, and Western Europe, where research-intensive ecosystems support the development of artificial intelligence (AI) hardware and software solutions. Unlike physical machinery, feedforward neural networks are typically delivered as integrated AI models, algorithmic libraries, or embedded systems, often developed by firms specializing in machine learning infrastructure. Key production regions include China’s Yangtze River Delta and Pearl River Delta, which host over 70% of Asia’s AI hardware manufacturers, supported by dense clusters of semiconductor foundries, PCB assembly lines, and firmware development centers.

These industrial zones enable rapid prototyping and deployment of AI inference systems incorporating feedforward architectures, particularly for edge computing devices, industrial automation, and predictive analytics platforms. Vertical integration across chip design (e.g., ASICs, FPGAs), model training pipelines, and deployment frameworks allows suppliers to reduce time-to-market by up to 40% compared to fragmented supply chains. Buyers benefit from localized access to technical talent, with engineering teams averaging 12–18 months of domain-specific AI development experience. Typical lead times for customized model integration range from 21 to 60 days, depending on data complexity and hardware compatibility requirements.

How to Evaluate Neural Network Feedforward Suppliers?

Procurement decisions should be guided by structured assessment criteria focusing on technical capability, compliance, and delivery reliability:

Technical Compliance

Confirm adherence to recognized AI development standards, including ISO/IEC 23053:2022, the framework for AI systems using machine learning, and ISO/IEC 5338:2023 for AI system life cycle processes. For regulated industries (healthcare, automotive, aerospace), demand documentation of model validation processes compliant with sector-specific frameworks such as FDA 21 CFR Part 11 for electronic records or IEC 61508 for functional safety. Verify that training datasets are documented for bias mitigation, version control, and reproducibility.
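As an illustration of what dataset version control and reproducibility documentation might contain, the sketch below fingerprints a training set with SHA-256 and records it alongside a version label and random seed. The function name `dataset_record`, the field names, and the sample rows are hypothetical and not drawn from any standard; a supplier's actual manifest format may differ.

```python
import hashlib
import json


def dataset_record(name, version, rows, seed):
    """Build a manifest entry that ties a training run to exact dataset contents."""
    # Serialize rows deterministically so identical data always hashes the same.
    payload = "\n".join(",".join(str(v) for v in row) for row in rows).encode("utf-8")
    return {
        "name": name,
        "version": version,
        "row_count": len(rows),
        "sha256": hashlib.sha256(payload).hexdigest(),
        "training_seed": seed,
    }


# Hypothetical sample data: two features plus a label per row.
rows_v1 = [[0.1, 0.7, 1], [0.4, 0.2, 0], [0.9, 0.5, 1]]
rows_v2 = [[0.1, 0.7, 1], [0.4, 0.2, 0], [0.9, 0.5, 0]]  # one label changed

record_v1 = dataset_record("sensor-train", "1.0.0", rows_v1, seed=42)
record_v2 = dataset_record("sensor-train", "1.0.1", rows_v2, seed=42)

print(json.dumps(record_v1, indent=2))
print("contents changed:", record_v1["sha256"] != record_v2["sha256"])
```

A buyer could ask for a record of this kind with every model delivery, then recompute the hash on the supplied data to confirm that the model was trained on the documented version.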

Development Capability Assessment

Assess supplier infrastructure through the following benchmarks:

- Minimum team size of 15 engineers, with at least 40% holding advanced degrees in computer science or applied mathematics

- Proven track record of deploying feedforward networks in production environments (minimum 3 case studies required)

- In-house capabilities covering data preprocessing, hyperparameter tuning, cross-validation, and model compression for edge deployment

Correlate development maturity with on-time project completion rates (target ≥95%) and post-deployment support responsiveness (target ≤4 hours for critical issues).
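The benchmarks above can be turned into a simple screening check. The following is a minimal sketch: the thresholds come from this section, while the `SupplierProfile` fields and the two example suppliers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SupplierProfile:
    name: str
    engineers: int
    advanced_degree_engineers: int
    production_case_studies: int
    on_time_rate: float             # fraction of projects delivered on time
    critical_response_hours: float  # response time for critical issues


def failed_criteria(s):
    """Return the names of the benchmarks the supplier does not meet."""
    degree_share = s.advanced_degree_engineers / s.engineers if s.engineers else 0.0
    checks = {
        "team size >= 15": s.engineers >= 15,
        "advanced degrees >= 40%": degree_share >= 0.40,
        "production case studies >= 3": s.production_case_studies >= 3,
        "on-time completion >= 95%": s.on_time_rate >= 0.95,
        "critical response <= 4 h": s.critical_response_hours <= 4.0,
    }
    return [label for label, passed in checks.items() if not passed]


# Hypothetical suppliers used only to exercise the check.
strong = SupplierProfile("Supplier A", 20, 9, 4, 0.96, 3.0)
weak = SupplierProfile("Supplier B", 12, 3, 2, 0.90, 6.0)

for supplier in (strong, weak):
    failures = failed_criteria(supplier)
    status = "qualified" if not failures else "failed: " + "; ".join(failures)
    print(f"{supplier.name}: {status}")
```

Returning the list of failed benchmarks, rather than a single pass/fail flag, makes it easier to see which gaps a supplier would need to close before requalification.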

Transaction and Intellectual Property Safeguards

Require formal IP assignment agreements when commissioning custom models. Use milestone-based payment structures with independent code and performance audits at each phase. Prioritize suppliers who provide full model documentation and interpretability reports, including weight matrices, activation functions, and inference latency metrics. Pre-deployment testing should include benchmarking against standard datasets (e.g., MNIST, CIFAR-10) or industry-specific validation sets to confirm that accuracy meets the stated specifications.
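A pre-deployment acceptance check of the kind described here can be sketched in a few lines. The example below is an assumption-laden illustration: it uses a tiny 2-2-1 ReLU network with hand-set weights that computes XOR, a four-sample validation set, and arbitrary thresholds (99% accuracy, 5 ms per prediction). A real audit would load the supplier's delivered weights and run a full validation set on the target hardware.

```python
import statistics
import time

# Hypothetical hand-set weights for a 2-2-1 ReLU network that computes XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]  # one row of input weights per hidden unit
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [1.0, -2.0]
OUTPUT_B = 0.0


def relu(x):
    return x if x > 0.0 else 0.0


def predict(features):
    """Forward pass: input -> ReLU hidden layer -> linear output, thresholded at 0.5."""
    hidden = [
        relu(sum(w * x for w, x in zip(row, features)) + b)
        for row, b in zip(HIDDEN_W, HIDDEN_B)
    ]
    score = sum(w * h for w, h in zip(OUTPUT_W, hidden)) + OUTPUT_B
    return 1 if score >= 0.5 else 0


def evaluate(samples, labels, repeats=200):
    """Return (accuracy, median latency in ms per prediction)."""
    correct = sum(predict(x) == y for x, y in zip(samples, labels))
    timings = []
    for _ in range(repeats):
        for x in samples:
            start = time.perf_counter()
            predict(x)
            timings.append((time.perf_counter() - start) * 1000.0)
    return correct / len(samples), statistics.median(timings)


def meets_spec(accuracy, latency_ms, min_accuracy, max_latency_ms):
    return accuracy >= min_accuracy and latency_ms <= max_latency_ms


VALIDATION_X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
VALIDATION_Y = [0, 1, 1, 0]

accuracy, latency_ms = evaluate(VALIDATION_X, VALIDATION_Y)
print(f"accuracy={accuracy:.2f}, median latency={latency_ms:.4f} ms")
print("meets spec:", meets_spec(accuracy, latency_ms, min_accuracy=0.99, max_latency_ms=5.0))
```

The median is used instead of the mean so that occasional scheduler pauses do not distort the latency figure; a contract might instead specify a percentile such as p95 or p99.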

What Are the Leading Neural Network Feedforward Providers?

No qualified suppliers were identified in the current dataset matching the exact product category "neural network feedforward." This may reflect the intangible nature of AI model supply, where offerings are often embedded within broader AI solution packages rather than listed as discrete components. As a result, procurement strategies must shift from catalog-based sourcing to capability-driven vendor qualification.

Market Observations

In the absence of listed suppliers, buyers should focus on identifying firms with demonstrated expertise in shallow and deep feedforward architectures, particularly those offering transparent, auditable models suitable for high-assurance applications. Leading vendors typically operate hybrid business models combining proprietary algorithms with open-source frameworks (e.g., TensorFlow, PyTorch), enabling interoperability while protecting core IP. Suppliers differentiate through specialization: some emphasize real-time inference optimization, while others focus on low-power deployment or regulatory compliance. Due diligence should include review of GitHub repositories, published research papers, and third-party benchmark results to validate technical claims.

FAQs

How to verify neural network feedforward supplier credibility?

Validate technical credentials through peer-reviewed publications, conference participation (e.g., NeurIPS, ICML), and contributions to open-source machine learning projects. Request redacted project portfolios showing implementation of feedforward networks in commercial settings. Cross-check certifications with issuing bodies and conduct live technical interviews with assigned engineering personnel.

What is the typical timeline for custom model development?

Standard feedforward network implementation requires 3–6 weeks, including data preparation, training, and validation. Complex use cases involving large-scale feature vectors or constrained hardware environments may extend timelines to 8–10 weeks. Add 7–14 days for integration and stress testing in operational environments.
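The ranges above combine into an end-to-end estimate with simple arithmetic. The sketch below assumes the integration and stress-testing window is added after development in both the standard and the complex case; the helper name `total_days` is hypothetical.

```python
def total_days(dev_weeks, integration_days):
    """Combine a (min, max) development range in weeks with a (min, max) integration range in days."""
    low = dev_weeks[0] * 7 + integration_days[0]
    high = dev_weeks[1] * 7 + integration_days[1]
    return low, high


INTEGRATION_DAYS = (7, 14)

standard = total_days((3, 6), INTEGRATION_DAYS)
complex_case = total_days((8, 10), INTEGRATION_DAYS)

print(f"standard: {standard[0]}-{standard[1]} days")          # standard: 28-56 days
print(f"complex:  {complex_case[0]}-{complex_case[1]} days")  # complex:  63-84 days
```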

Can neural network models be exported globally?

Yes, algorithmic solutions are generally exportable, but compliance with local data governance laws (e.g., GDPR, CCPA) and with export controls on dual-use technology, including encryption (e.g., national rules implementing the Wassenaar Arrangement), must be confirmed. Suppliers should provide documentation confirming lawful data usage and model transparency to facilitate cross-border deployment.

Do suppliers offer free model prototypes?

Prototype availability varies. Some vendors provide proof-of-concept implementations at no cost for qualified leads with clear use cases and potential for long-term engagement. Others charge feasibility assessment fees ranging from $1,500 to $5,000, typically credited toward full project costs upon contract signing.

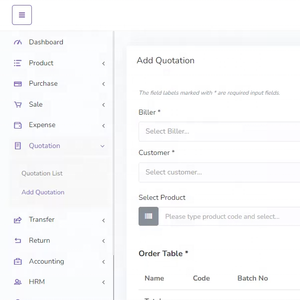

How to initiate customization requests?

Submit detailed requirements including input dimensionality, desired hidden layer configuration, activation functions, expected inference speed (ms/prediction), and target hardware platform. Reputable providers respond with architectural proposals, estimated accuracy ranges, and resource consumption projections within 5–7 business days.
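One way to structure such a request is as a small machine-readable specification from which basic resource figures can be derived. The sketch below is illustrative only: the `FeedforwardSpec` class, its field names, and the example values (64 inputs, hidden layers of 32 and 16 units, 4 outputs, a 2 ms latency target, an ARM Cortex-M7 board) are assumptions, not a supplier's actual intake format.

```python
from dataclasses import dataclass


@dataclass
class FeedforwardSpec:
    input_dim: int
    hidden_layers: list           # units per hidden layer, in order
    output_dim: int
    activation: str = "relu"
    max_latency_ms: float = 10.0  # expected inference speed per prediction
    target_hardware: str = "unspecified"

    def layer_shapes(self):
        """Return (inputs, outputs) for each fully connected layer."""
        sizes = [self.input_dim, *self.hidden_layers, self.output_dim]
        return list(zip(sizes[:-1], sizes[1:]))

    def parameter_count(self):
        """Weights plus one bias per output unit in every layer."""
        return sum(n_in * n_out + n_out for n_in, n_out in self.layer_shapes())

    def macs_per_inference(self):
        """Multiply-accumulate operations for one forward pass."""
        return sum(n_in * n_out for n_in, n_out in self.layer_shapes())

    def weight_bytes(self, bytes_per_param=4):
        """Storage for parameters, assuming 32-bit floats by default."""
        return self.parameter_count() * bytes_per_param


spec = FeedforwardSpec(
    input_dim=64,
    hidden_layers=[32, 16],
    output_dim=4,
    activation="relu",
    max_latency_ms=2.0,
    target_hardware="ARM Cortex-M7",
)

print(spec.layer_shapes())        # [(64, 32), (32, 16), (16, 4)]
print(spec.parameter_count())     # 2676
print(spec.macs_per_inference())  # 2624
print(spec.weight_bytes())        # 10704
```

Figures like these give both sides a shared starting point for the supplier's resource consumption projections; the same parameter count stored as 8-bit integers (`bytes_per_param=1`) would need 2676 bytes.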