Machine Learning Applications

About machine learning applications

Where to Find Machine Learning Applications Suppliers?

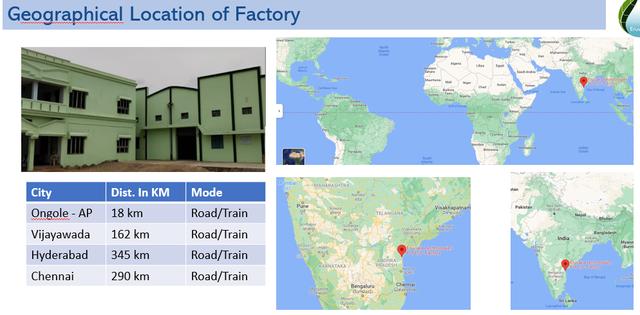

The global supplier landscape for machine learning applications is decentralized and technology-driven, with leading development hubs in North America, Western Europe, and East Asia. Unlike traditional manufacturing, ML application development depends on software innovation rather than physical infrastructure: core capabilities rest on specialized R&D teams, algorithmic expertise, and proficiency in cloud integration.

Key regions such as Silicon Valley (USA), Berlin (Germany), and Shenzhen (China) host dense ecosystems of AI engineering talent, venture capital, and data infrastructure that accelerate product development cycles. These clusters offer access to advanced computational resources, such as GPU clusters and distributed training infrastructure, and benefit from proximity to academic institutions and tech incubators. Suppliers based in these hubs often achieve faster time-to-market for customized ML solutions, supported by mature DevOps practices and continuous integration/continuous deployment (CI/CD) pipelines.

Buyers can gain a strategic advantage by working with suppliers that operate under established data governance frameworks (e.g., GDPR-compliant entities in the EU), which eases integration into enterprise IT systems. Regional specialization also shapes application focus: North American firms often lead in predictive analytics and natural language processing (NLP), while many Asian developers emphasize computer vision and real-time inference optimization for industrial automation.

How to Choose Machine Learning Applications Suppliers?

Prioritize these verification protocols when selecting partners:

Technical Compliance & Standards Adherence

Verify adherence to recognized software quality and security benchmarks, such as ISO/IEC 27001 certification for information security management and a SOC 2 Type II attestation report covering controls over data security and processing integrity. For regulated industries, confirm compliance with sector-specific requirements such as HIPAA (healthcare) or PCI DSS (payment card data). Demand documentation of model validation processes, including bias testing, accuracy metrics (precision, recall, F1-score), and reproducibility logs.
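To check the accuracy metrics a supplier reports, you can recompute them yourself on a held-out sample. The minimal Python sketch below assumes a binary classification task; the labels are hypothetical, and the function name `precision_recall_f1` is illustrative rather than part of any supplier's tooling. Libraries such as scikit-learn offer equivalent functionality (e.g., `sklearn.metrics.precision_recall_fscore_support`).

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 for a single positive class (binary task assumed)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical hold-out labels vs. a supplier model's predictions
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

With these toy labels (3 true positives, 1 false positive, 1 false negative) the script prints `precision=0.75 recall=0.75 f1=0.75`. Comparing recomputed figures like these against the supplier's reported numbers is a quick consistency check.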

Development Capability Assessment

Evaluate technical infrastructure and human capital:

- Minimum team size of 15 full-stack and ML engineers with proven experience in TensorFlow, PyTorch, or equivalent frameworks

- Proven track record of deploying models in production environments (minimum 3 live implementations verifiable via case studies)

- In-house data annotation, preprocessing, and MLOps pipeline capabilities

Review GitHub repositories and published research papers, or request API sandbox access, to validate technical depth and code maintainability.

Transaction Safeguards & IP Protection

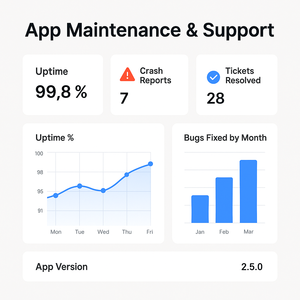

Require contractual stipulations on intellectual property ownership, model explainability, and data confidentiality. Utilize milestone-based payment structures tied to deliverables such as model training completion, performance benchmarking, and system integration. Conduct third-party code audits prior to final deployment. Insist on SLAs guaranteeing model retraining cycles, uptime (>99.5%), and response times for incident resolution.
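The >99.5% uptime target is easier to negotiate once it is translated into a concrete downtime budget. The minimal sketch below assumes the SLA measures uptime over a fixed calendar window (30 days by default); actual measurement windows and exclusions such as scheduled maintenance vary by contract, and `allowed_downtime_minutes` is a hypothetical helper name.

```python
def allowed_downtime_minutes(uptime_pct, period_days=30):
    """Maximum downtime, in minutes, permitted by an uptime percentage over a period."""
    if not 0 <= uptime_pct <= 100:
        raise ValueError("uptime_pct must be between 0 and 100")
    period_minutes = period_days * 24 * 60
    return period_minutes * (100 - uptime_pct) / 100


for days in (30, 365):
    print(f"99.5% over {days} days -> {allowed_downtime_minutes(99.5, days):.0f} minutes")
```

This prints 216 minutes for a 30-day window (3.6 hours) and 2628 minutes for a year (about 43.8 hours), which gives both parties a measurable threshold for incident penalties.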

What Are the Best Machine Learning Applications Suppliers?

| Company Name | Location | Years Operating | Staff | Core Expertise | On-Time Delivery | Avg. Response | Ratings | Reorder Rate |
|---|---|---|---|---|---|---|---|---|
| Supplier data not available | | | | | | | | |

Performance Analysis

Because no supplier-specific data is available, procurement decisions must rely on structured evaluation frameworks rather than comparative metrics. Established vendors with more than five years of operating history tend to offer more reliable delivery and stronger post-deployment support. Emerging boutique firms often differentiate through niche specializations, such as edge AI or federated learning, but may lack the capacity for enterprise-wide rollouts. Prioritize suppliers with documented experience in your target use case (e.g., demand forecasting, anomaly detection, sentiment analysis) and transparent model lifecycle management practices. Before finalizing a contract, request video walkthroughs of development workflows and interview the lead data scientists directly.

FAQs

How to verify machine learning applications supplier reliability?

Validate technical claims through proof-of-concept (PoC) trials limited to non-critical workflows. Request anonymized client references and review past project outcomes, focusing on model drift management, scalability under load, and integration complexity. Treat participation in recognized AI ethics initiatives, or a record of open-source contributions, as an indicator of long-term credibility.
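One common way to quantify model drift during a PoC is the population stability index (PSI), which compares the distribution of a feature (or of model scores) in recent data against a training-time baseline. The guide itself does not prescribe a drift metric; PSI is a swapped-in illustration, and the equal-width binning, the 10 bins, and the 1e-6 floor for empty bins are assumptions of this sketch. A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the threshold should be agreed with the supplier.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric variable.

    Uses equal-width bins spanning the baseline's range (an assumption;
    quantile-based bins are another common choice).
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # floor empty bins at a tiny share so the log term stays finite
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores: training baseline vs. a shifted production window
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]
print(f"PSI baseline vs itself: {population_stability_index(baseline, baseline):.3f}")
print(f"PSI baseline vs recent: {population_stability_index(baseline, recent):.3f}")
```

The first line prints `0.000` because the distributions are identical; the second is far above 0.25 because the recent window has moved entirely into the upper half of the baseline range.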

What is the average development timeline for custom ML applications?

A standard implementation typically takes 8–12 weeks, covering data ingestion, feature engineering, model training, and API integration. Complex systems involving multimodal inputs or real-time inference may take up to 20 weeks. Allow an additional 2–4 weeks for user acceptance testing and, in regulated sectors, compliance reviews.

Can suppliers deploy machine learning models globally?

Yes. Cloud-agnostic suppliers support deployment across AWS, Microsoft Azure, and Google Cloud Platform, enabling regional hosting to meet data residency requirements. Confirm support for containerization (Docker), orchestration (Kubernetes), and CI/CD compatibility for seamless integration into existing IT architectures. Verify edge deployment options separately for IoT or low-latency applications.

Do vendors provide free pilot programs?

Pilot policies vary. Some suppliers offer limited-scope PoCs at no cost to qualified enterprises that commit to a subsequent full deployment. Others charge nominal setup fees (typically $2,000–$5,000) that are credited toward the final contract value. Budget accordingly based on solution complexity and data readiness.

How to initiate customization requests?

Submit detailed functional specifications, including input data types (structured/unstructured), expected output format, latency constraints (e.g., <100 ms or <1 s), and integration endpoints (e.g., REST API, Kafka). Reputable providers typically return a technical feasibility assessment within 5 business days and a prototype demonstration within 3 weeks.
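A latency constraint such as <100 ms is only testable once the specification states how it is measured. The sketch below assumes the constraint applies to the 95th-percentile response time, computed with the nearest-rank method over a batch of measured request latencies; the percentile choice, the sample values, and the function names are all hypothetical.

```python
import math


def percentile(samples, q):
    """Nearest-rank percentile: smallest sample with at least q% of samples at or below it."""
    if not samples:
        raise ValueError("no latency samples")
    if not 0 < q <= 100:
        raise ValueError("q must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(q * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_latency_budget(samples_ms, budget_ms, q=95):
    """True if the q-th percentile latency is strictly below the budget."""
    return percentile(samples_ms, q) < budget_ms


# Hypothetical end-to-end latencies (ms) from a prototype load test
samples_ms = [42, 55, 61, 48, 97, 120, 51, 58, 63, 49,
              70, 66, 52, 59, 88, 45, 47, 53, 60, 62]
p95 = percentile(samples_ms, 95)
print(f"p95 = {p95} ms, meets <100 ms: {meets_latency_budget(samples_ms, 100)}")
```

This prints `p95 = 97 ms, meets <100 ms: True`. The single 120 ms outlier does not breach a p95 budget, but it would breach a budget defined on maximum latency, which is why the percentile belongs in the specification.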